When Slugging Percentage Beats On-Base Percentage

What’s the single most important offensive statistic? I imagine most of us who have bookmarked FanGraphs would not say batting average or RBIs. A lot of us would name wOBA or wRC+. But neither of those is the type of thing you can calculate in your head. If I go to a game, and a batter goes 1-for-4 with a double and a walk, I know that he batted .250 with a .400 on-base percentage and a .500 slugging percentage. I can do that in my head.
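For anyone who wants the head math spelled out, here is a minimal Python sketch. The `BattingLine` class and its field names are made up for illustration; the formulas are the standard definitions, with on-base percentage counting walks, hit-by-pitches, and sacrifice flies in the denominator.

```python
from dataclasses import dataclass


@dataclass
class BattingLine:
    """One batter's counting stats (hypothetical helper, not from any library)."""
    at_bats: int
    singles: int = 0
    doubles: int = 0
    triples: int = 0
    home_runs: int = 0
    walks: int = 0
    hit_by_pitch: int = 0
    sacrifice_flies: int = 0

    @property
    def hits(self) -> int:
        return self.singles + self.doubles + self.triples + self.home_runs

    @property
    def total_bases(self) -> int:
        return self.singles + 2 * self.doubles + 3 * self.triples + 4 * self.home_runs

    def batting_average(self) -> float:
        # Hits per at-bat.
        return self.hits / self.at_bats

    def on_base_percentage(self) -> float:
        # Times on base per plate appearance (sacrifice bunts excluded).
        times_on_base = self.hits + self.walks + self.hit_by_pitch
        chances = self.at_bats + self.walks + self.hit_by_pitch + self.sacrifice_flies
        return times_on_base / chances

    def slugging_percentage(self) -> float:
        # Total bases per at-bat.
        return self.total_bases / self.at_bats


# The example from the text: 1-for-4 with a double and a walk.
line = BattingLine(at_bats=4, doubles=1, walks=1)
print(f"{line.batting_average():.3f} / "
      f"{line.on_base_percentage():.3f} / "
      f"{line.slugging_percentage():.3f}")
```

The walk adds a plate appearance but not an at-bat, which is why the on-base denominator is five while the other two stay at four.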

So of the easily calculated numbers — the ones you might see on a TV broadcast, or on your local Jumbotron — what’s the best? I’d guess that if you polled a bunch of knowledgeable fans, on-base percentage would get a plurality of the votes. There’d be some support for OPS too, I imagine, though OPS is on the brink of can’t-do-it-in-your-head. Slugging percentage would be in the mix, too. Batting average would be pretty far down the list.

I think there are two reasons for on-base percentage’s popularity. First, of course, is Moneyball. Michael Lewis showed how, in 2002, the market undervalued players with good on-base skills. The second reason is that it makes intuitive sense. You get on base, you mess with the pitcher’s windup and the fielders’ alignment, and good things can happen, scoring-wise.

To check, I looked at every team from 1914 through 2015 — the entire Retrosheet era, encompassing 2,198 team-seasons. I calculated the correlation coefficient between a team’s on-base percentage and its runs per game. And, it turns out, it’s pretty high — 0.890. That means, roughly, that you can explain nearly 80% of a team’s scoring by looking at its on-base percentage. Slugging percentage is close behind, at 0.867. Batting average, unsurprisingly, is worse (0.812), while OPS, also unsurprisingly, is better (0.944).
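The jump from a correlation of 0.890 to "nearly 80%" comes from squaring the coefficient. Here is a rough sketch of both steps in Python. The function names are my own, and the team data is not reproduced here, so the loop only re-squares the four coefficients quoted above.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length, at least 2 long")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / math.sqrt(var_x * var_y)


def share_explained(r):
    """Coefficient of determination: the share of variance a linear fit explains."""
    return r * r


# Coefficients quoted in the text for 1914-2015 (stat vs. runs per game).
quoted = {"OBP": 0.890, "SLG": 0.867, "AVG": 0.812, "OPS": 0.944}
for stat, r in quoted.items():
    print(f"{stat}: r = {r:.3f}, r^2 = {share_explained(r):.3f}")
```

With real data, `pearson_r` would take one list of team on-base percentages and one list of the same teams' runs per game. Squaring 0.890 gives about 0.79, which is where "nearly 80%" comes from.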

But that difference doesn’t mean that OBP>SLG is an iron rule. Take 2015, for example. The correlation coefficient between on-base percentage and runs per game for the 30 teams last year was just 0.644, compared to 0.875 for slugging percentage. Slugging won in 2014 too, 0.857-0.797. And 2013, 0.896-0.894. And 2012, and 2011, and 2010, and 2009, and every single year starting in the Moneyball season of 2002. Slugging percentage, not on-base percentage, is on a 14-year run as the best predictor of offense.

And it turns out that the choice of endpoints matters. On-base percentage has a higher correlation coefficient to scoring than slugging percentage for the period 1914-2015. But slugging percentage explains scoring better in the period 1939-2015 and every subsequent span ending in the present. Slugging percentage, not on-base percentage, is most closely linked to run scoring in modern baseball.

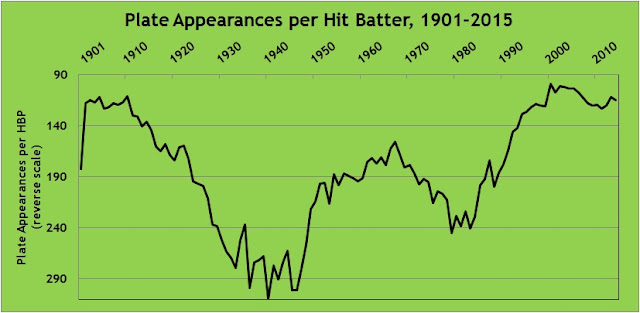

Let me show that graphically. I calculated the correlation coefficient between slugging percentage and scoring, minus the correlation coefficient between on-base percentage and scoring. A positive number means that slugging percentage did a better job of explaining scoring, and a negative number means that on-base percentage did better. I looked at three-year periods (to smooth out the data) from 1914 to 2015, so on the graph below, the label 1916 represents the years 1914-1916.
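The three-year calculation can be sketched roughly as follows. This assumes each window pools every team-season in its three years into one sample before correlating, which is one plausible reading of the method rather than a confirmed one. The row layout and function names are hypothetical, and the numbers in the usage example are invented placeholders, not real team data.

```python
import math
from collections import defaultdict


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / math.sqrt(var_x * var_y)


def slg_minus_obp_by_window(rows, window=3):
    """Map each window's final year to r(SLG, runs) - r(OBP, runs).

    rows: iterable of (year, obp, slg, runs_per_game), one per team-season.
    Positive values mean slugging tracked scoring more closely in that window.
    """
    by_year = defaultdict(list)
    for year, obp, slg, rpg in rows:
        by_year[year].append((obp, slg, rpg))
    if not by_year:
        return {}

    first, last = min(by_year), max(by_year)
    result = {}
    for end in range(first + window - 1, last + 1):
        # Pool every team-season in the window (an assumption, see above).
        pooled = [ts for y in range(end - window + 1, end + 1)
                  for ts in by_year.get(y, [])]
        if len(pooled) < 3:
            continue  # too few points for a meaningful correlation
        obp, slg, rpg = zip(*pooled)
        result[end] = pearson_r(slg, rpg) - pearson_r(obp, rpg)
    return result


# Invented placeholder rows, only to show the call shape.
toy_rows = [
    (2001, 0.300, 0.400, 4.0), (2001, 0.320, 0.420, 4.2),
    (2002, 0.310, 0.440, 4.4), (2002, 0.330, 0.410, 4.1),
    (2003, 0.340, 0.450, 4.5), (2003, 0.305, 0.430, 4.3),
]
differences = slg_minus_obp_by_window(toy_rows)
```

Keying each result by the window's final year matches the labeling convention in the text, where 1916 stands for 1914-1916.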

A few obvious observations:

- The Deadball years were extreme outliers. There were dilution-of-talent issues through 1915, when the Federal League operated. World War I shortened the season in 1918 and 1919. And nobody hit home runs back then. The Giants led the majors with 39 home runs in 1917. Three Blue Jays matched or beat that number last year.

- Since World War II, slugging percentage has been, pretty clearly, the more important driver of offense. Beginning with 1946-1948, there have been 68 three-year spans, and in only 19 of them (28%) did on-base percentage do a better job of explaining run scoring than slugging percentage.

- The one notable exception: the years 1995-1997 through 2000-2002, during which on-base percentage ruled. Ol’ Billy Beane, he knew what he was doing. (You probably already knew that.)

This raises two obvious questions. The first one is: Why? The graph isn’t random; there are somewhat distinct periods during which either on-base percentage or slugging percentage is better correlated to scoring. What’s going on in those periods?

To try to answer that question, I ran another set of correlations, comparing the slugging percentage minus on-base percentage correlations to various per-game measures: runs, hits, home runs, doubles, triples, etc. Nothing really correlates all that well. I tossed out the four clear outliers on the left side of the graph (1914-16, 1915-17, 1916-18, 1917-19), and the best correlations I got were still less than 0.40. Here’s runs per game, with a correlation coefficient of -0.35. The negative number means that the more runs scored per game, the more on-base percentage, rather than slugging percentage, correlates to scoring.

That makes intuitive sense, in a way. When there are a lot of runs being scored (the 1930s, the Steroid Era), all you need to do is get guys on base, because the batters behind them stand a good chance of driving them in. When runs are harder to come by (Deadball II, or the current game), it’s harder to bring around a runner to score without the longball. Again, this isn’t a really strong relationship, but you can kind of see it.

The second question is, what does this mean? Well, I suppose we shouldn’t look at on-base percentage in a vacuum, because OBP alone isn’t the best descriptor of scoring. A player with good on-base skills but limited power works at the top or bottom of a lineup, but if you want to score runs in today’s game, you need guys who can slug.

Taking that a step further, if Beane exploited a market inefficiency in on-base percentage at the beginning of the century, might there be a market inefficiency in slugging percentage today? It doesn’t seem that way. First, there’s obviously an overlap between slugging percentage and on-base percentage (i.e., hits), and just hitting the ball hard on contact doesn’t fill the bill if you don’t make enough contact. Recall the correlation coefficient between run-scoring and on-base percentage is 0.89 and between runs and slugging is 0.87. The correlation between run-scoring and pure power, as measured by isolated slugging, is just 0.66. That’s considerably lower than batting average (0.81). ISO alone doesn’t drive scoring.
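To make the overlap concrete: slugging percentage splits cleanly into batting average plus isolated slugging, so the "hits" part of slugging is exactly the part it shares with on-base percentage. Here is a small sketch using the standard definitions. The function names are my own, and the season line at the bottom is invented for illustration, not a real player's.

```python
def batting_average(singles, doubles, triples, home_runs, at_bats):
    """Hits per at-bat."""
    return (singles + doubles + triples + home_runs) / at_bats


def slugging_percentage(singles, doubles, triples, home_runs, at_bats):
    """Total bases per at-bat."""
    return (singles + 2 * doubles + 3 * triples + 4 * home_runs) / at_bats


def isolated_slugging(doubles, triples, home_runs, at_bats):
    """Extra bases per at-bat; singles contribute nothing."""
    return (doubles + 2 * triples + 3 * home_runs) / at_bats


# Invented season line, for illustration only.
season = dict(singles=90, doubles=30, triples=2, home_runs=20, at_bats=500)

avg = batting_average(**season)
slg = slugging_percentage(**season)
iso = isolated_slugging(season["doubles"], season["triples"],
                        season["home_runs"], season["at_bats"])

print(f"AVG {avg:.3f} + ISO {iso:.3f} = SLG {slg:.3f}")
```

Strip the batting-average component out of slugging and what remains is ISO, which is why it can correlate with scoring so much less well than slugging as a whole.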

The second reason there probably isn’t a market inefficiency in slugging percentage is that inefficiencies, by definition, assume that the market as a whole is missing something. In the Moneyball example, other clubs didn’t see the value in Scott Hatteberg and his ilk. It’s harder to believe, fifteen years later, with teams employing directors of baseball systems development and posting for quantitative analysts, that all 30 teams are missing the boat on players who slug but don’t contribute a lot otherwise. Or, put another way, there’s a reason Pedro Alvarez and Chris Carter were non-tendered, and it’s not market inefficiency.