Do Pitchers Keep Defenses on Their Toes?

As a Blue Jays fan, I’ve enjoyed the opportunity to watch Mark Buehrle pitch the last two years. Getting to see a player with below-average stuff (and that’s probably generous) retire major-league batters regularly is a real treat. On top of that, Mark Buehrle is one of the fastest-paced pitchers in all of baseball. In 2014 he had the shortest time between pitches, or pace, in all of baseball. He was second to teammate R.A. Dickey in 2013 and first in 2012 as well. Games with Mark Buehrle on the mound move quickly. Often you will hear comments that this has the effect of keeping fielders “on their toes”.

Here’s his manager John Gibbons after a start last September – “He’s a teammate’s dream because he keeps his defence on their toes by working fast.” And here is a quote from Jose Bautista after a start last June – “He’s pitching great, throwing strikes, keeping people off balance and allowing us a chance to play defence behind him. It’s no surprise that every time he pitches there are plenty of good defensive plays made. He keeps everybody engaged in the game because he works quick.”

What Gibbons, Joey Bats, and many others are saying is that, because of the quicker pace of play, fielders are more ready to react to balls hit towards them. The implication is that Mark Buehrle, and other similarly fast-paced pitchers, receive better-than-expected defense, especially on the infield. I’ve often wondered if this belief had any merit, so I decided to look into it myself.

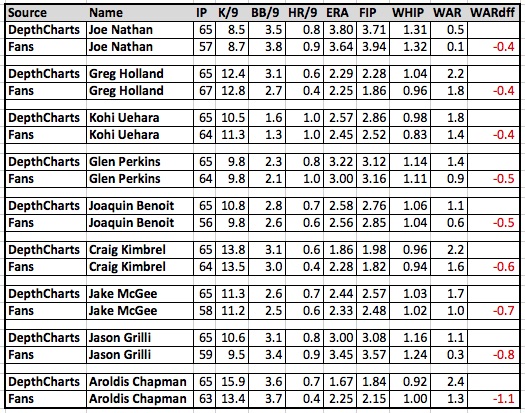

I took a look at the rate at which groundballs hit off of Buehrle have been turned into outs throughout his career and compared his numbers to those of his teammates. (I would have liked to include only the other starting pitchers among Buehrle’s teammates but was unable to do so. I wouldn’t expect it to make much of a difference, though.) These numbers come from baseball-reference.com.

Here is what that data looks like:

We can see that Buehrle’s ability to “keep infielders on their toes” does not translate to more outs on groundballs relative to his teammates in every year. In fact, in only seven of his 14 full seasons has Mark Buehrle’s rate of groundballs converted into outs exceeded that of his teammates. If being a fast-paced pitcher improved the defense behind you, then we’d expect to see Mark Buehrle consistently outperform his teammates. Only once in the past five years has this been the case.

That one season, 2012, is interesting. In 2012, Buehrle’s one year with the Miami Marlins, only 41 of the 259 groundballs hit off of Buehrle went for hits. This 82% out rate was well above that of the rest of the team, which stood at 72%. Perhaps we could conclude that the Miami infielders were particularly affected by Buehrle’s fast pace. This is likely not the case, though, as the primary shortstop of that team, Jose Reyes, was also the primary shortstop behind Buehrle in 2013 and 2014 with the Blue Jays. In those two years, Buehrle actually had worse infield defense behind him than his teammates did (and, sadly, the two worst rates of groundball-to-out conversion in his career), so it’s likely that Buehrle’s success in 2012 was due more to luck.

This analysis doesn’t consider the average velocity of groundballs hit off of Buehrle compared to his fellow pitchers, or anything to do with groundball trajectories, but it seems clear that any defensive advantage Buehrle gains from pitching quickly is minimal at best. Over the course of Buehrle’s 14 complete seasons, groundballs hit off of him have been converted into outs 75.3% of the time, while groundballs hit off of his teammates have turned into outs 74.0% of the time. This difference equates to between 5 and 6 extra outs a year. This isn’t a huge advantage, but 5 or 6 extra outs a season and regular two-and-a-half-hour games are better than a kick in the teeth.
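The extra-outs figure comes from multiplying the gap in conversion rates by the number of groundballs allowed in a season. Here is a minimal sketch of that arithmetic. The two rates are the career numbers quoted above; the 400 groundballs per season is an assumed round number for illustration, not a figure from the data.

```python
def extra_outs(pitcher_rate, teammates_rate, groundballs):
    """Outs gained over what teammates' conversion rate would yield
    on the same number of groundballs."""
    return (pitcher_rate - teammates_rate) * groundballs


CAREER_RATE = 0.753      # Buehrle, 14 complete seasons (from the text)
TEAMMATES_RATE = 0.740   # his teammates over the same span (from the text)

# Assumption: a hypothetical season workload, chosen only for illustration.
ASSUMED_GROUNDBALLS_PER_SEASON = 400

gain = extra_outs(CAREER_RATE, TEAMMATES_RATE, ASSUMED_GROUNDBALLS_PER_SEASON)
print(f"extra outs per season: {gain:.1f}")
```

A 1.3-point gap only reaches 5 or 6 outs when the season includes roughly 400 or more groundballs, so in a lighter groundball year the edge would be smaller still.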

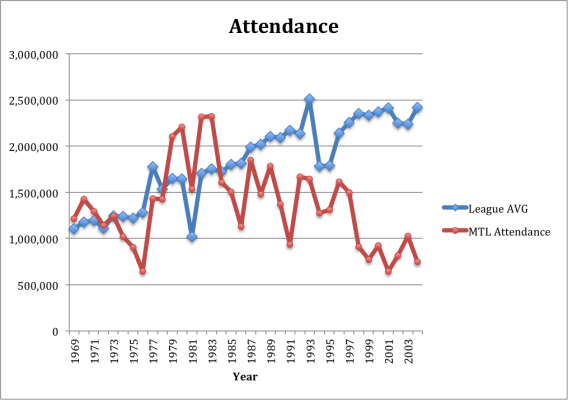

Next, I wanted to see if the ability to “keep fielders on their toes” was seen in pitchers other than Mark Buehrle. I looked at the ten fastest-paced starting pitchers from 2014 (min 100 IP) to see if there was a noticeable increase in groundballs converted into outs when compared to their teammates. I did the same for the ten slowest-paced starting pitchers. In the fast-paced group are Buehrle, Dickey, Doug Fister, Wade Miley, Jon Niese, Andrew Cashner, Michael Wacha, TJ House, Dan Haren, and Chris Young. In the slow-paced group are plodders Jorge de la Rosa, Yusmeiro Petit, Clay Buchholz, Tyler Skaggs, Edinson Volquez, Chris Archer, Hiroki Kuroda, Masahiro Tanaka, Yu Darvish, and Edwin Jackson. (One pitcher had to be excluded from each group because he was traded midseason, so exact split data for his teammates was unavailable. These pitchers were David Price from the slow-paced group and Vidal Nuno from the fast-paced group.)
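For anyone who wants to repeat this comparison, here is a rough sketch of one way to do it. It pools groundballs and outs across a group, then subtracts the pooled rate of that group’s teammates. The function names are my own, and every count in the example is a placeholder invented for illustration, not real 2014 data. Pooling is also just one choice; averaging each pitcher’s individual edge would be another.

```python
def pooled_out_rate(lines):
    """Share of groundballs converted into outs, pooled across
    a list of (groundballs, outs) pairs."""
    total_groundballs = sum(gb for gb, _ in lines)
    total_outs = sum(outs for _, outs in lines)
    return total_outs / total_groundballs


def edge_over_teammates(pitcher_lines, teammate_lines):
    """Pooled out rate of a group of pitchers minus that of their teammates."""
    return pooled_out_rate(pitcher_lines) - pooled_out_rate(teammate_lines)


# Placeholder (groundballs, outs) counts, invented for illustration only.
fast_pitchers = [(300, 222), (250, 190)]
fast_teammates = [(1900, 1410), (2000, 1500)]
slow_pitchers = [(280, 214), (260, 195)]
slow_teammates = [(1950, 1450), (2050, 1530)]

print(f"fast group edge: {edge_over_teammates(fast_pitchers, fast_teammates):+.3f}")
print(f"slow group edge: {edge_over_teammates(slow_pitchers, slow_teammates):+.3f}")
```

Pooling weights each pitcher by how many groundballs he allowed, so a heavy groundball pitcher like Buehrle counts for more than a flyball pitcher in the same group.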

The results are below:

Rather than seeing the fast-paced pitchers receive better groundball defense than their slow-paced peers, we actually see the reverse. Groundballs off the bats of hitters facing slow-paced pitchers were converted to outs more often than those facing fast-paced pitchers. Once again, this analysis doesn’t consider batted-ball velocity or trajectory, but it seems clear that the supposed benefit of a faster pace doesn’t show up in infield defense. And although the data table above shows that slow-paced pitchers benefited from stronger infield defense, it seems unlikely that this is caused by the slow pace of the pitchers. Rather, this is almost certainly statistical noise.
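One way to put the “statistical noise” claim to a test is a two-proportion z-test on the groundball counts of the two groups. I did not run this as part of the analysis above, so treat the following as a sketch of how one might check it. The counts in the example are hypothetical placeholders, not the real group totals.

```python
import math


def two_proportion_z(outs_a, n_a, outs_b, n_b):
    """z statistic for the difference between two out rates,
    using the pooled rate for the standard error."""
    p_a = outs_a / n_a
    p_b = outs_b / n_b
    pooled = (outs_a + outs_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / standard_error


def two_sided_p_value(z):
    """Two-sided p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical totals for illustration only: (outs, groundballs) per group.
slow_outs, slow_groundballs = 1500, 2000
fast_outs, fast_groundballs = 1470, 2000

z = two_proportion_z(slow_outs, slow_groundballs, fast_outs, fast_groundballs)
print(f"z = {z:.2f}, p = {two_sided_p_value(z):.3f}")
```

With only a couple of thousand groundballs per group, a gap of a point or two in out rate would typically produce a large p-value, which is what “noise” would look like here.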

With pace-of-play concerns becoming more prevalent in baseball these days, there may be some pressure on pitchers to take less time between pitches. If pitchers do make such changes, they shouldn’t expect to receive any stronger defense behind them, even if some may suggest as much. So the next time a broadcaster or anyone else applauds a guy for “keeping the defense on its toes” with his fast pace, you can remain skeptical that such a benefit exists. After all, these are major-league ballplayers, many of whom are being paid millions of dollars. I’m sure they can pay attention for an extra ten seconds.