The Home Run Derby and Second Half Production: A Meta-Analysis of All Players from 1985 to 2013

The “Home Run Derby Curse” has become a popular concept discussed among the media and fans alike. In fact, at the time of writing, a simple Google search of the term “Home Run Derby Curse” turns up more than 180,000 hits, with reports concerning the “Curse” ranging from mainstream media sources such as NBC Sports and Sports Illustrated, to widely read blogs including Bleacher Report and FanGraphs, to renowned baseball analysis organizations like Baseball Prospectus and even SABR.

This article seeks to shed greater light on whether the “Home Run Derby Curse” exists and, if so, what its substantive impact is. Specifically, I ask: do those who participate in the “Home Run Derby” experience a greater decline in offensive production than players who did not take part in the Derby?

Answering this question is of utmost importance to general managers, field managers, and fans alike. If players who partake in the Derby do experience a decline in offensive production between the first and second halves of the season, those in MLB front offices and dugouts can use this information to mitigate potential slumps. Further, if Derby participation leads to a second half slump, fantasy baseball owners can use this knowledge to better manage their teams. Simply put, knowing the effect of Derby participation on offensive production provides us with a deeper understanding of player production.

The next section of this study will address previous literature concerning the “Home Run Derby Curse,” and will discuss how this project builds upon these studies.

Previous Research

Although a good deal of research has been conducted concerning the “Curse,” the veracity of much of this work is difficult to assess. Many of the previous studies on this issue have used subjective analysis of first and second half production of Derby participants in order to assess the effects of the “Curse” (see Carty 2009; Breen 2012; Catania 2013). Although these works have certainly highlighted the need for further research, they are simply not objective enough to definitively address the question of the “Home Run Derby Curse’s” existence.

To date, the most rigorous statistical analysis of the “Curse” is an article by McCollum and Jaiclin (2010), which appeared in Baseball Research Journal. In examining OPS and HR %, McCollum and Jaiclin found a statistically significant relationship between participation in the Derby and a decline in second half production. At the same time, they examined the relationship between first and second half production in years in which players who had previously participated in the Home Run Derby did not participate, and found no statistically significant drop off in production in those years.

At first glance, this appears to be fairly definitive evidence that the “Curse” is real. However, they also found that players’ first half production in years in which they participated in the Derby was substantially higher than in years in which they did not. This suggests that players are chosen for the Derby because of extraordinary performances. Based on these findings, McCollum and Jaiclin conjectured that participants’ decline after the Derby is likely due to their elevated first half performance, and that the second half decline is simply regression to the mean.

Despite the strong statistical basis of McCollum and Jaiclin’s work, there are a number of points in this work that need to be addressed. First, McCollum and Jaiclin only examine those players who have participated in the Derby and have at least 502 plate appearances in a season, thus prohibiting direct comparison with those who did not participate in the Derby. At the heart of the “Home Run Derby Curse,” however, is the idea that participants in the Derby experience a second half slump greater than is to be expected of any player.

The question that follows directly from this conception is: do Derby participants experience a slump greater than is to be expected from players who did not participate in the Derby? To answer this question sufficiently, Derby participants must be compared to non-participants. Because their methodology relies only on data from Derby participants, McCollum and Jaiclin were unable to answer it.

Second, McCollum and Jaiclin use t-tests to test their hypotheses. This is a strong, objective statistical approach. However, it is not ideal, as it does not allow for the inclusion of control variables. Additional factors may therefore affect both Derby participation and second half production simultaneously, creating a spurious finding. Multivariate regression addresses this problem, because it allows such factors to be controlled.
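A small simulation can show why a comparison of group means can mislead when a third factor drives both Derby selection and the change between halves. This is only a sketch on synthetic data, not the study's data. The confounder `hot_first_half`, the selection rule, and all effect sizes are hypothetical. The true Derby effect is set to zero. The raw difference in means, which is what a two-sample t-test compares, picks up a spurious "effect". OLS with the confounder as a control does not.

```python
import numpy as np


def simulate_confounding(n=7000, seed=0):
    """Compare a raw difference in means with OLS that controls for a confounder.

    Synthetic data only: a hypothetical confounder (an unusually hot first half)
    raises both the chance of Derby selection and the first-minus-second-half
    change, while the true Derby effect is set to zero.
    """
    rng = np.random.default_rng(seed)
    hot_first_half = rng.normal(size=n)
    # Hypothetical selection rule: hot first halves are likelier to be picked.
    derby = (hot_first_half + rng.normal(scale=0.5, size=n) > 1.5).astype(float)
    # The outcome depends only on the confounder (true Derby effect = 0).
    change = 0.01 * hot_first_half + rng.normal(scale=0.02, size=n)

    # What a two-sample t-test compares: the raw difference in group means.
    naive = change[derby == 1].mean() - change[derby == 0].mean()

    # OLS with an intercept, the Derby dummy, and the confounder as a control.
    X = np.column_stack([np.ones(n), derby, hot_first_half])
    beta, *_ = np.linalg.lstsq(X, change, rcond=None)
    return naive, beta[1]


naive, adjusted = simulate_confounding()
print(f"difference in means: {naive:.4f}  OLS with control: {adjusted:.4f}")
```

In this setup, the difference in means comes out clearly positive. The controlled Derby coefficient sits near zero.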

The final issue with McCollum and Jaiclin’s work centers on their theoretical expectations and their measures of offensive production. Theoretical expectations are necessary in statistical work because they inform analysis. Without them, researchers are simply guessing at how best to measure their independent and dependent variables. Very little theoretical explanation of the “Curse” has been put forth in previous work, including McCollum and Jaiclin’s piece. Their measures of offensive output are therefore not necessarily the most appropriate.

This article is an attempt to build upon previous work concerning the “Home Run Derby Curse” and to address the above issues. In the next section, I develop a short theoretical framework concerning the “Curse.” Four hypotheses are derived from this theory and tested using expanded data and a different methodological approach. The results suggest that a “Curse” does exist.

Theoretical Basis of the Home Run Derby Curse

Two main theories have been posited to explain the “Home Run Derby Curse.” First, it has been suggested that participation in the Derby saps players of the energy they need to keep performing well in the second half of the season (Marchman 2010). Marchman summarizes this theory well in his account of Paul Konerko, who went deep into the 2002 Home Run Derby in Milwaukee. He wrote:

The strange experience of taking batting practice without a cage under the lights in front of tens of thousands of people left him sore in places he usually isn’t sore, like his obliques and lower back, the core from which a hitter draws his power. Over the second half of the year, he hit just seven home runs, and his slugging average dropped from .571 to .402.

In essence, this theory argues that players who participate in the Derby experience muscle fatigue in those muscles from which power hitting is drawn. Because these fatigued muscles are imperative for power hitting, players who participated in the Derby experience reduced power, and see a drop in power numbers in the second half of the season. Thus, one can hypothesize:

H1.1: Players who participate in the Derby will see a greater decline in their power numbers than players who do not participate in the Derby.

Furthermore, one might expect a player to experience a greater decrease in energy the more swings he takes during the Derby. The logic is that using the power hitting muscles for a longer period of time should fatigue them to a greater extent. Thus, a player who takes 10 swings in the Derby should experience less muscle fatigue than a player who takes 50 swings. Following this line of reasoning, the Derby should have a greater effect on a player’s second half power hitting when he takes more swings during the Derby. Since players who hit more home runs during the Derby take more swings, it can also be hypothesized:

H1.2: Players who hit more home runs in the Derby will see a greater decline in power numbers in the second half of the season than players who hit fewer home runs in the Derby (including those who do not participate).

The second theory of the “Curse” proposes that participation in the Derby leads to players altering their swings (Breen 2011; Catania 2013). It is thought that this altered swing carries over into the second half of the season, affecting players’ offensive output.

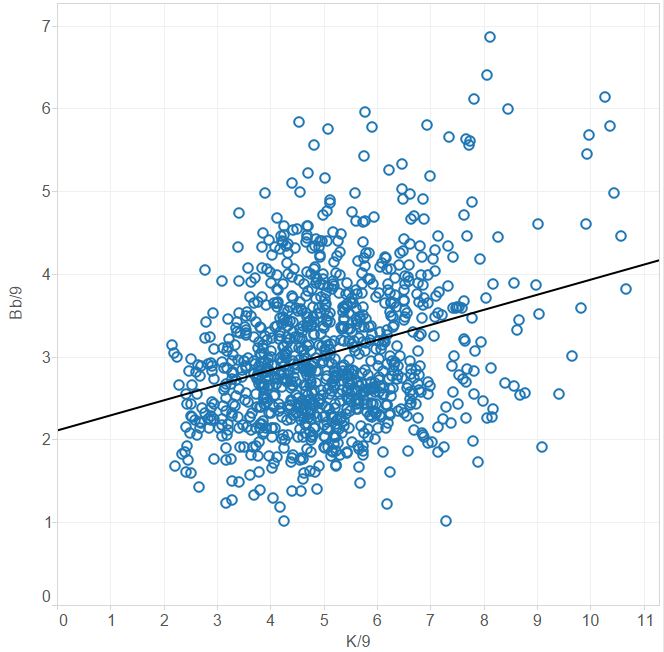

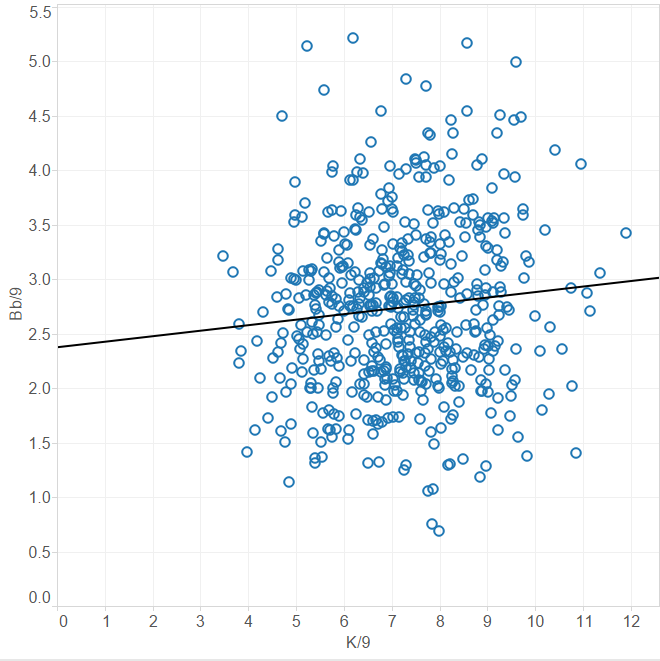

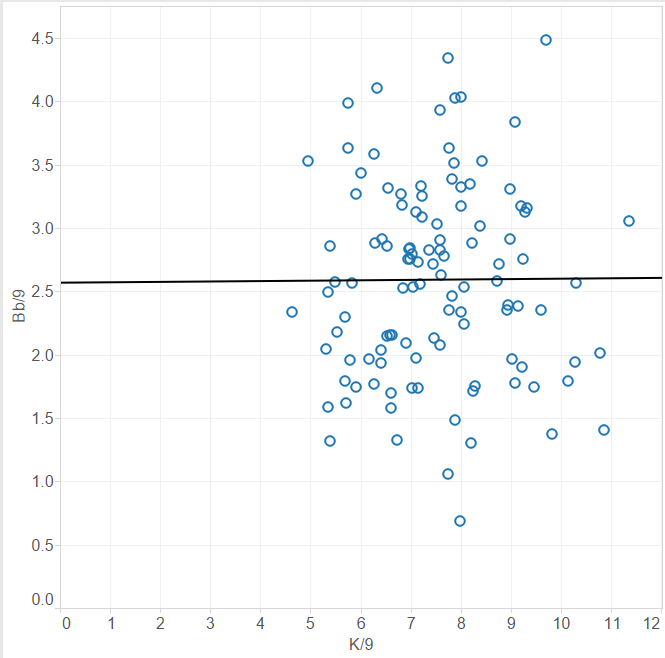

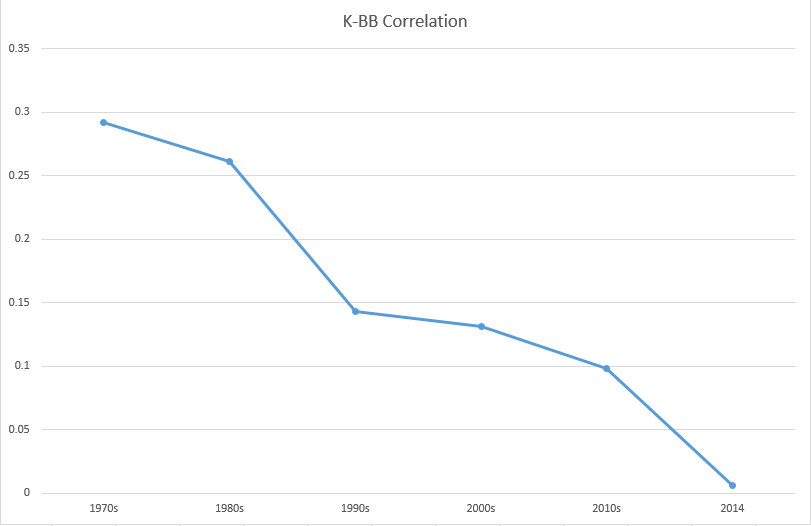

Although studies of the “Curse” rarely delve into how players tweak their swings, it is likely safe to assume that players change their approach in the hope of belting as many homers as possible for that one night, developing an even greater power stroke. It is commonly conjectured that power and strikeouts are positively correlated (see Kendall 2014); that is, greater power is associated with more strikeouts. This conjecture may hold less well for exceptionally talented players (e.g., Hank Aaron, Ted Williams, Mickey Mantle, Willie Mays). If we accept it for the majority of players, however, then players who change their swing to hit more home runs should see a corresponding increase in strikeouts in the second half of the season.[i] Thus, it can be hypothesized:

H2.1: Players who participate in the Home Run Derby will experience more strikeouts per plate appearance in the second half of the season than those who did not participate in the Derby.

As with hypotheses 1.1 and 1.2, it can also be assumed that the effect of participation in the Derby will be greater the more swings an individual takes during the Derby. That is to say, if a player hits more home runs during the Derby, the altered swing he uses during the Derby will be more likely to carry through to the second half of the season. This leads to the hypothesis:

H2.2: Derby participants who hit more home runs in the Derby will experience more strikeouts per plate appearance in the second half of the season than those who hit fewer home runs in the Derby (including those who do not participate).

In the next section of this study, I will discuss the analytical approach, variable operationalization, and the data sources used to address the above four hypotheses.

Data and Analytical Method

Below I will begin with a discussion of the data used in this study. I will then discuss the independent and dependent variables for each hypothesis as well as the control variables used in this study. Finally, I will discuss the methodological approach used in this study.

Data Sources and Structure

Above, it is hypothesized that those players who either participated in the Derby, or performed well in the Derby will see greater offensive decline between season halves than those who either did not participate in the Derby, or struggled in the Derby. In order to properly test these hypotheses one must use data that includes those who participated in the Home Run Derby, and those who did not participate in the Derby.

This paper performs a meta-analysis of all players with at least 100 plate appearances in both the first and second halves of the season from 1985 (the first year in which the Home Run Derby was held) through 2013. The unit of analysis is therefore the player-year. The data exclude 1988, because the Derby was cancelled due to rain. They also exclude 1994, because the players’ strike cut the second half of that season short.
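The sample rules above can be sketched as a simple filter over player-year records. The `PlayerYear` record and its field names are hypothetical; the article does not describe how its data are stored. The thresholds and excluded seasons come from the text.

```python
from dataclasses import dataclass


@dataclass
class PlayerYear:
    player_id: str  # hypothetical identifier
    year: int
    pa_first: int   # plate appearances before the All-Star Break
    pa_second: int  # plate appearances after the All-Star Break


# 1988: Derby rained out; 1994: second half cut short by the strike.
EXCLUDED_YEARS = {1988, 1994}


def build_sample(rows, min_pa=100, first_year=1985, last_year=2013):
    """Keep player-years with at least `min_pa` plate appearances in each half."""
    return [
        r for r in rows
        if first_year <= r.year <= last_year
        and r.year not in EXCLUDED_YEARS
        and r.pa_first >= min_pa
        and r.pa_second >= min_pa
    ]


rows = [
    PlayerYear("a", 1988, 300, 300),  # excluded year
    PlayerYear("b", 1990, 300, 99),   # too few second-half PA
    PlayerYear("c", 1990, 100, 100),  # kept: exactly 100 PA in each half
    PlayerYear("d", 2014, 300, 300),  # outside the study window
]
print([r.player_id for r in build_sample(rows)])
```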

Independent Variables

The main independent variable for hypotheses 1.1 and 2.1 is a dichotomous measure of participation in the Home Run Derby. A player was coded 1 if he participated in the Derby and 0 if he did not. Between 1985 and 2013, a total of 229 player-years were coded as participating in the Derby.

The independent variable for hypotheses 1.2 and 2.2 is a measure of each player’s success in the Home Run Derby: a count of the home runs he hit in that year’s Derby. This variable ranges from 0 to 41 (Bobby Abreu in 2005). Those who did not participate in the Derby were coded as 0.[ii]
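The two independent variables can be coded together from a lookup of Derby home run totals. This is a minimal sketch. The mapping `derby_home_runs` and the player keys are hypothetical. Note that a participant who hit no home runs is coded (1, 0), which is the same count as a non-participant but a different participation value.

```python
def code_derby_variables(player_years, derby_home_runs):
    """Return {(player_id, year): (participation dummy, Derby HR count)}.

    derby_home_runs: hypothetical mapping from (player_id, year) to the number
    of home runs hit in that year's Derby, for participants only. Everyone
    else is coded 0 on both variables.
    """
    coded = {}
    for key in player_years:
        if key in derby_home_runs:
            coded[key] = (1, derby_home_runs[key])
        else:
            coded[key] = (0, 0)
    return coded


keys = [("player_a", 2005), ("player_b", 2005), ("player_c", 2005)]
derby = {("player_a", 2005): 41, ("player_c", 2005): 0}
print(code_derby_variables(keys, derby))
```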

Dependent Variables

Hypotheses 1.1 and 1.2 posit that participation in the Derby and greater success in the Derby, respectively, will lead to decreased power numbers. Power hitting can be measured in numerous ways, the most obvious being home runs per plate appearance (HRPA). Theoretically, however, if players are being sapped of energy, this should affect all power numbers, not simply HRPA. Restricting one’s understanding of power to HRPA ignores other forms of power hitting, such as doubles and triples. To avoid discarding variance unnecessarily, one can measure change in power hitting as the difference between first and second half extra base hits per plate appearance (XBPA) rather than HRPA.

Thus, the dependent variable for hypotheses 1.1 and 1.2 is understood as the difference between XBPA in the first and second halves of the season for each player-year. XBPA is calculated by dividing the number of extra base hits (doubles, triples, and home runs) a player has hit by the number of plate appearances, thus providing a standardized measure of extra base hits for each player-year.

The dependent variable for hypotheses 1.1 and 1.2 was created by calculating the XBPA for the first half and for the second half of the season for each player-year. The second half XBPA was then subtracted from the first half XBPA. Theoretically, this variable can range from -1.000 to 1.000. In reality, it ranges from -.116308 to .1098476, with a mean of -.0012872 and a standard deviation of .025814.
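The construction of this dependent variable can be written out as a short function. This is a sketch. The input tuple layout (2B, 3B, HR, PA) and the example player's numbers are hypothetical. A positive result means the player's extra base hit rate fell after the break.

```python
def xbpa(doubles, triples, home_runs, pa):
    """Extra base hits (2B + 3B + HR) per plate appearance."""
    return (doubles + triples + home_runs) / pa


def delta_xbpa(first, second):
    """First-half XBPA minus second-half XBPA; positive means power declined.

    first, second: (doubles, triples, home_runs, plate_appearances) tuples.
    """
    return xbpa(*first) - xbpa(*second)


# Hypothetical player-year: 40 XBH in 350 PA, then 20 XBH in 250 PA.
change = delta_xbpa((20, 1, 19, 350), (12, 0, 8, 250))
print(round(change, 4))  # 0.0343
```

Because each half's XBPA lies between 0 and 1, the difference is bounded by -1 and 1, as the text notes.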

The dependent variable for hypotheses 2.1 and 2.2 is the difference between first and second half strikeouts per plate appearance (SOPA) for each player-year. SOPA is calculated by dividing the number of strikeouts a player has by his plate appearances, thus providing a standardized measure of strikeouts for each player-year.

The dependent variable for these hypotheses was created by calculating the SOPA for the first half and for the second half of the season for each player-year. The second half SOPA was then subtracted from the first half SOPA. Theoretically, this variable can range from -1.000 to 1.000. In reality, it ranges from -.1857143 to .1580312, with a mean of -.003198 and a standard deviation of .0378807.
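The sign convention matters when reading the results later on. Because this variable is first half minus second half SOPA, a negative value means the player struck out more often after the break. A minimal sketch with hypothetical numbers:

```python
def sopa(strikeouts, pa):
    """Strikeouts per plate appearance."""
    return strikeouts / pa


def delta_sopa(so_first, pa_first, so_second, pa_second):
    """First-half SOPA minus second-half SOPA.

    A negative value means the player struck out MORE often after the break.
    """
    return sopa(so_first, pa_first) - sopa(so_second, pa_second)


# Hypothetical player-year: 56 K in 350 PA (.160), then 45 K in 250 PA (.180).
d = delta_sopa(56, 350, 45, 250)
print(round(d, 3))  # -0.02
```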

Control Variables

A number of control variables are included in this study. A dummy variable denoting whether a player was traded during the season is included.[iii] To control for the possible effects of injury in the second half of the season, a dummy variable denoting if a player had a low number of plate appearances in the second half of the season is included.[iv] Further, I include a dummy variable measuring whether a player had a particularly high number of first half plate appearances.[v] Finally, controls denoting observations in which the player played the entire season in the National League,[vi] observations that fall during the “Steroid Era,”[vii] observations that fall in a period in which “greenies” were tolerated,[viii] and observations that fall during the era of interleague play are included.[ix]

Analytical Approach

The main dependent variables used to test the above hypotheses are the difference between first and second half XBPA and the difference between first and second half SOPA. For each of these variables, the data fit a normal curve almost perfectly.[x] Each variable is continuous, with a theoretical range from -1 to 1 and an infinite number of possible values in between. These variables are bounded rather than ranging from negative to positive infinity. Even so, because they are continuous and close to normally distributed, OLS regression is the most appropriate methodological approach for this study.
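For readers who want to see what the OLS estimates in the tables below consist of, here is a minimal NumPy sketch. It computes coefficients and classical (non-robust) standard errors. It is not the author's code, and the article does not say which software or standard-error type was used. A package such as statsmodels would report the same classical estimates by default.

```python
import numpy as np


def ols(X, y):
    """OLS with an intercept; returns (coefficients, classical standard errors).

    X: (n,) or (n, k) array of regressors WITHOUT the constant column.
    The first returned coefficient is the intercept.
    """
    X = np.column_stack([np.ones(len(y)), X])
    n, k = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    beta = XtX_inv @ X.T @ y
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)          # unbiased residual variance
    se = np.sqrt(np.diag(sigma2 * XtX_inv))   # sqrt of diagonal of Var(beta)
    return beta, se


# Usage on synthetic data (not the study's data).
rng = np.random.default_rng(0)
x = rng.normal(size=500)
y = 0.5 + 0.3 * x + rng.normal(scale=0.1, size=500)
beta, se = ols(x, y)
print(beta.round(3), se.round(4))
```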

In the next section of this piece, I will report the findings of the tests of hypotheses 1.1 through 2.2. I will then discuss the implications of these findings.

Analysis

This section will begin with the presentation and discussion of the findings concerning hypotheses 1.1 and 1.2. I will then present and discuss the findings of tests of hypotheses 2.1 and 2.2.

Analysis of Hypotheses 1.1 and 1.2

Column 1 of table 1 shows the results of the test of hypothesis 1.1. The intercept for this test is -.0007 but is statistically insignificant. This suggests that, with all variables in the model held at 0, players see no change in their XBPA between the first and second halves of the season. The “Derby participation” variable has a statistically significant coefficient of .008. Because the dependent variable is first half XBPA minus second half XBPA, this means that a player who participates in the Derby can expect his XBPA to drop by .008 more between halves than a non-participant.

Of course, Derby participants may see a greater drop in their XBPA than the average player simply because a player usually needs a high XBPA to be chosen for the Derby.[xi] As these players regress to the mean, they would be more likely than non-participants to see a drop in XBPA. To account for this, the sample can be restricted to players with a high first half XBPA.
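Regression to the mean can produce such a drop even when nothing at all happens at the break. The following simulation is a sketch under hypothetical assumptions. Each player has a fixed true XBPA, and each half adds independent noise. The talent and noise scales and `top_k=230` are illustrative choices, picked only to resemble the roughly 229 Derby player-years. The "selected" group is simply the players with the best first halves.

```python
import random


def regression_to_mean_demo(n=7000, top_k=230, seed=42):
    """Return (mean drop for the top_k first halves, mean drop for everyone else).

    Hypothetical model: each player has a fixed true XBPA, and each half's
    observed XBPA is that talent plus independent noise. Nothing happens at the
    break, so any drop among the selected players is pure regression to the mean.
    """
    rng = random.Random(seed)
    halves = []
    for _ in range(n):
        talent = rng.gauss(0.077, 0.02)
        first = talent + rng.gauss(0, 0.02)
        second = talent + rng.gauss(0, 0.02)
        halves.append((first, second))

    # "Select" the top_k players by first-half XBPA, roughly as Derby selection does.
    halves.sort(key=lambda h: h[0], reverse=True)
    selected, rest = halves[:top_k], halves[top_k:]

    def mean_drop(group):
        return sum(first - second for first, second in group) / len(group)

    return mean_drop(selected), mean_drop(rest)


selected_drop, rest_drop = regression_to_mean_demo()
print(f"selected: {selected_drop:.4f}  others: {rest_drop:.4f}")
```

The selected group shows a clear first-to-second-half drop, while everyone else shows essentially none. This is why restricting the comparison to similarly strong first halves matters.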

The mean first half XBPA for all players (those who did and did not participate in the Derby) between 1985 and 2013 is .0766589. The tests displayed in column 2 of table 1 restrict the sample to only those players above this mean. As can be seen, the intercept is statistically significant, with a coefficient of .01. Players with average or above average first half XBPA can therefore expect their XBPA to drop by .01 after the All-Star Break when all other variables in the model are held at 0.

Table 1: The Effect of Home Run Derby Participation on the Difference in XBPA.

| | Full Sample | 1st Half XBPA > .0766589 | 1st Half XBPA > .1138781 |
|---|---|---|---|
| Derby Participation | .008***(.002) | .002(.002) | -.004(.003) |
| Trade | .001(.001) | .002(.002) | .007(.006) |
| Diminished PAs | -.005***(.001) | -.002**(.001) | .002(.001) |
| High 1st Half ABs | -.001(.001) | -.006***(.001) | -.008**(.003) |
| National League | .0003(.001) | -.001(.001) | .0002(.002) |
| Steroids | -.001(.002) | -.001(.002) | .004(.005) |
| Greenies | .004**(.002) | .003(.002) | .003(.002) |
| Interleague | .002(.002) | .00003(.002) | -.01(.005) |
| Intercept | -.0007(.002) | .01***(.002) | .04***(.005) |
| N | 7,330 | 3,904 | 636 |

Note: Values above represent unstandardized coefficients, with standard errors in parentheses. *p<.05, **p<.01, ***p<.001

Turning to the Derby participation variable, one notices that it is now statistically insignificant, with a coefficient of .002. When the sample is restricted to those who showed average or above average power in the first half of the season, Derby participants show no statistically discernible difference in power hitting compared with non-participants.

The variables denoting a low number of plate appearances in the second half of the season and a high number of at-bats in the first half of the season are both statistically significant, with negative coefficients. This means that a player with a high number of first half at-bats, or with a low number of second half plate appearances, sees a smaller drop in XBPA between halves (or even an increase) than other players.

Although the results in column 2 of table 1 are telling, it may be useful to restrict the sample even further. Those who are selected for the Derby are, for all intents and purposes, the best power hitters in baseball. One can therefore restrict the sample to the best power hitters by comparing only those players with a first half XBPA equal to or above the average for Derby participants, while keeping all Derby participants in the sample.

The mean first half XBPA for Derby participants between 1985 and 2013 is .1138781. Tests restricting the sample to those with a first half XBPA of at least .1138781 are displayed in column 3 of table 1. The intercept for these tests is statistically significant, with a coefficient of .04. Players with a first half XBPA of at least .1138781 can therefore expect a drop of .04 in their XBPA after the All-Star Break. The coefficient for Derby participation is -.004 but statistically insignificant. This suggests that Derby participants do not see a marked decrease in their power hitting after the Derby when compared to players of similar power hitting prowess.
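The restriction used for these tests keeps elite non-participants and every Derby participant. It can be sketched as a single filter. The record layout (dicts with `"xbpa_first"` and `"derby"` keys) is a hypothetical choice for illustration. The .1138781 threshold is the participant mean reported in the text.

```python
DERBY_MEAN_FIRST_HALF_XBPA = 0.1138781  # mean first-half XBPA of Derby participants


def restrict_sample(rows, threshold=DERBY_MEAN_FIRST_HALF_XBPA):
    """Keep player-years at or above `threshold`, plus every Derby participant.

    rows: list of dicts with hypothetical keys "xbpa_first" (first-half XBPA)
    and "derby" (1 if the player took part in that year's Derby, else 0).
    """
    return [r for r in rows if r["xbpa_first"] >= threshold or r["derby"] == 1]


rows = [
    {"id": "A", "xbpa_first": 0.130, "derby": 0},  # elite non-participant: kept
    {"id": "B", "xbpa_first": 0.090, "derby": 1},  # participant below threshold: kept
    {"id": "C", "xbpa_first": 0.095, "derby": 0},  # dropped
]
print([r["id"] for r in restrict_sample(rows)])  # ['A', 'B']
```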

The only variable that shows a statistically significant effect in these tests is the one denoting whether a player had a high number of first half at-bats. As with previous tests, its coefficient is negative. This suggests that players with a high number of first half at-bats see a smaller decline (or an increase) in XBPA between the first and second halves of the season compared with those without a high number of first half at-bats.

Columns 1 through 4 of table 2 show tests of the effect of success in the Derby (the number of home runs hit) on the difference between first and second half XBPA. The test with the full sample is displayed in column 1 of table 2. The intercept for this test is statistically insignificant, suggesting that, on average, players do not experience a marked change in their XBPA between the first and second halves of the season.

The variable denoting the number of home runs a player hit during the Derby is statistically significant and has a coefficient of .0003. This means that for every home run a player hits in the Derby, he can expect the drop in his XBPA after the All-Star Break to grow by .0003.

Of course, the relationship between Derby success and the difference between first and second half XBPA is likely to be curvilinear rather than linear. A squared term for the number of home runs hit during the Derby should therefore be included. The test including this variable is displayed in column 2 of table 2. The intercept is again statistically insignificant, suggesting that when all variables in the model are held at 0, players should not see a marked change in their XBPA between the first and second halves of the season.

Table 2: The Effect of the # of Home Runs Hit in the Derby on the Difference in XBPA.

| | Full Sample: Without HR^2 | Full Sample: With HR^2 | Restricted: XBPA>.0766589[xii] | Restricted: XBPA>.1138781[xiii] |
|---|---|---|---|---|
| Home Run Total | .0003*(.0002) | .001**(.0004) | .00002(.0002) | -.00002(.0002) |
| Home Runs Squared | . | -.00004*(.00002) | . | . |
| Trade | -.001(.001) | -.001(.001) | .002(.002) | .007(.002) |
| Diminished PAs | -.005***(.001) | -.005***(.001) | -.002**(.002) | .002(.002) |
| High 1st Half ABs | .001(.001) | .001(.001) | -.006***(.001) | -.008***(.002) |
| National League | .0003(.001) | .0003(.001) | -.001(.001) | .0002(.002) |
| Steroids | -.001(.002) | -.001(.002) | -.001(.002) | .004(.005) |
| Greenies | .004**(.002) | .004**(.002) | .003(.002) | -.003(.005) |
| Interleague | .002(.002) | .002(.002) | -.00003(.002) | -.009(.005) |
| Intercept | -.001(.002) | -.001(.002) | .01(.002) | .04***(.006) |
| N | 7,330 | 7,330 | 3,898 | 636 |

Note: Values above represent unstandardized coefficients, with standard errors in parentheses. *p<.05, **p<.01, ***p<.001

The effect of success in the Derby remains statistically significant, with a linear coefficient of .001. At low home run totals, then, each home run a player hits in the Derby is associated with a decline of roughly .001 in his second half XBPA. Further, the “home runs squared” variable is statistically significant, with a coefficient of -.00004. This indicates that the effect of each additional Derby home run on second half production shrinks as a player’s home run total rises. In essence, hitting 40 home runs during the Derby does not have the same effect on second half offensive production as hitting 30, and so on.
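With a squared term in the model, the effect of one more Derby home run depends on how many a player has already hit. The marginal effect is $\beta_1 + 2\beta_2 h$, and it reaches zero at $h^* = -\beta_1 / (2\beta_2)$. The sketch below plugs in the coefficients from column 2 of table 2 as printed. Because those coefficients are rounded, the numbers it produces are indicative only.

```python
B_HR = 0.001      # linear Derby HR coefficient, table 2 column 2 (rounded)
B_HR2 = -0.00004  # squared-term coefficient, table 2 column 2 (rounded)


def predicted_shift(hr):
    """Predicted contribution of Derby HR to the first-minus-second-half XBPA gap."""
    return B_HR * hr + B_HR2 * hr ** 2


def marginal_effect(hr):
    """Change in predicted_shift from one more Derby home run (its derivative)."""
    return B_HR + 2 * B_HR2 * hr


turning_point = -B_HR / (2 * B_HR2)  # HR total where the marginal effect is zero
print(round(turning_point, 2))  # 12.5
for hr in (10, 30, 40):
    print(hr, round(predicted_shift(hr), 4))
```

Taken at face value, these rounded estimates put the peak of the curve at about 12.5 Derby home runs. Beyond that point, each additional home run reduces the predicted gap.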

In terms of control variables, the variable denoting a diminished number of second half plate appearances is statistically significant with a negative coefficient in the tests reported in both column 1 and column 2 of table 2, while the variable denoting a high number of first half at-bats is not statistically significant in either.

As with the tests reported in table 1, it may be useful to restrict the sample to players with average and above average first half XBPA. Column 3 of table 2 shows the test of the effect of Derby success on the difference in XBPA between the two halves of the season when the sample is restricted to those with a first half XBPA at or above the league average (.0766589).

The intercept in this test is statistically significant, with a coefficient of .012. This means that, when all variables in the test are held at 0, players with an average or above average first half XBPA see a decline in their second half XBPA. Importantly, the number of home runs hit during the Derby is statistically insignificant. When the sample is restricted to those with average or above average first half XBPA, hitting more home runs in the Derby has no statistically discernible effect on the difference between first and second half XBPA.

Both the variable denoting whether a player had diminished second half plate appearances and the variable denoting whether a player had a high number of first half at-bats are statistically significant, with negative coefficients. This implies that players with diminished second half plate appearances, and players with a high number of first half at-bats, see a smaller decline (or an increase) in XBPA between the first and second halves of the season relative to other players.

Column 4 of table 2 restricts the sample based on the mean first half XBPA of those who participated in the Derby. The mean first half XBPA of these players is .1138781. The intercept for this test is statistically significant with a coefficient of .036. The variable measuring success in the Derby is statistically insignificant, meaning that the number of home runs a player hits in the Derby has no statistical effect on the difference between first and second half XBPA when comparing Derby participants to similar power hitters who did not participate in the Derby.

In terms of controls, the variable denoting whether a player had a high number of first half at-bats is again statistically significant, with a negative coefficient. As in previous tests, this suggests that players with a high number of first half at-bats see a smaller decline (or an increase) in XBPA between the first and second halves of the season relative to other players.

Analysis of Hypotheses 2.1 and 2.2

The results of the test of hypothesis 2.1 (participation in the Derby will lead to more strikeouts per plate appearance) are displayed in column 1 of table 3. This column shows the relationship between Derby participation and the change in SOPA between the first and second halves of the season. The intercept is -.006 and statistically significant. Since the dependent variable is first half SOPA minus second half SOPA, this means that, all other things equal, players strike out more often in the second half of the season.

The coefficient for “Derby participation” is -.005 and statistically significant. This means that Derby participants will see their SOPA increase by an additional .005 between halves of the season compared with non-participants. The intercept already implies that SOPA rises by .006 when all other variables are held at 0. Combined, these results suggest that Derby participants should see an increase of .011 in their SOPA between the first and second halves of the season.
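The arithmetic behind the .011 figure can be checked directly. The sketch below uses the intercept and Derby coefficient from column 1 of table 3 and holds every control at 0. The sign is flipped for display because the dependent variable is first half minus second half SOPA.

```python
INTERCEPT = -0.006   # table 3, column 1
DERBY_COEF = -0.005  # table 3, column 1


def predicted_sopa_gap(derby):
    """Predicted first-minus-second-half SOPA with all controls held at 0."""
    return INTERCEPT + DERBY_COEF * derby


for derby in (0, 1):
    # Negate the gap so the printed value is the predicted RISE in SOPA.
    print(derby, round(-predicted_sopa_gap(derby), 3))
# 0 0.006
# 1 0.011
```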

Unlike XBPA, SOPA is very unlikely to be associated with selection for the Home Run Derby. Moreover, the average first half SOPA for the entire sample is .1610312, whereas the mean first half SOPA for Derby participants is .1669383. Derby participants were actually more likely to strike out in any given plate appearance than non-participants. If regression to the mean were at work, participants would therefore be more likely to see their SOPA decrease between the first and second halves of the season. These results tell the opposite story, one that regression to the mean cannot explain. It is therefore unnecessary to restrict the sample, and one can state that hypothesis 2.1 is supported.

Turning to column 2 of table 3, one sees a test of hypothesis 2.2 (the more home runs a player hits in the Derby, the more his SOPA will rise between the first and second halves). As in column 1 of table 3, the intercept is -.006 and statistically significant. Thus, holding all other variables at 0, one can expect a player’s SOPA to increase by .006 between the first and second halves of the season.

The variable denoting the total number of home runs a player hit during the Derby has a statistically significant coefficient of -.0005. For every home run a player hits during the Derby, his second half SOPA therefore rises by an additional .0005 relative to his first half SOPA.

This relationship, however, is likely curvilinear. To account for this, I include a variable in which the total number of home runs a player hit during the Derby is squared. Column 3 of table 3 reports the results of a test including this squared term. When it is included, the coefficient for the total number of home runs becomes statistically insignificant. This suggests that the total number of home runs a player hit during the Derby is not related to the difference between that player’s first half and second half SOPA. It must be noted, however, that this finding does not negate the result in column 1 of table 3.

Interestingly, several control variables show statistical significance in all tests included in table 3. A player who was traded midseason can expect the difference between his first half and second half SOPA to become more negative, meaning he will see an increase in SOPA in the second half of the season. Further, having a below average number of plate appearances in the second half of the season is associated with a decrease in second half SOPA.


Table 3: The Effect of Home Run Derby Participation and the # of Home Runs Hit in the Derby on the Difference in SOPA.

| | Participation in Derby (1=Participation) | # of Home Runs Hit in Derby: Without HR^2 | # of Home Runs Hit in Derby: With HR^2 |
|---|---|---|---|
| Derby Participation/Home Run Total | -.005*(.003) | -.0005*(.0002) | -.00001(.0002) |
| Home Run Total Squared | . | . | -.00002(.00002) |
| Trade | -.004*(.002) | -.004*(.002) | -.005*(.002) |
| Diminished PAs | .005***(.001) | .005***(.001) | .005***(.001) |
| High 1st Half ABs | .002*(.001) | .002*(.001) | .002(.001) |
| National League | .002(.001) | .002(.001) | .002(.001) |
| Steroids | .002(.002) | .002(.002) | .002(.002) |
| Greenies | .0003(.002) | .0003(.002) | .0004(.002) |
| Interleague | -.002(.002) | -.001(.002) | -.001(.002) |
| Intercept | -.006*(.003) | -.006*(.003) | -.007*(.003) |
| N | 7,330 | 7,330 | 7,330 |

Note: Values above represent unstandardized coefficients, with standard errors in parentheses. *p<.05, **p<.01, ***p<.001

The next section of this paper will first place the main findings of this paper in a broader context of the “Home Run Derby Curse.” It will then discuss possible avenues for further research.

Implications

The results above were mixed. In some instances, participation or success in the Derby was statistically related to second half offensive decline, whereas in other tests, there was no relation between participation in the Home Run Derby and changes in offensive production between halves of the season. Using the full sample (N=7,330), the results showed that Derby participants can expect to see a greater drop in their XBPA between halves of the season than those who did not participate in the Derby. Moreover, those who have greater success in the Derby will see a greater drop in their XBPA between the first and second halves of the season than those who have had less success in the Derby. Further, in the full sample, Derby participants can expect, on average, to see their second half SOPA increase more than non-participants, and those with greater Derby success can expect a larger increase than those with less success.

These findings, however, must be discussed in closer detail. As McCollum and Jaiclin (2010) pointed out, some of these results may stem from the often extraordinary first half performances of Derby participants, in which case any second half decline may simply be regression to the mean.

To address this issue in testing the effect of Derby participation and success on change in XBPA, I restricted the sample to players whose first half XBPA was at or above the league average and, separately, to those whose first half XBPA was at or above the Derby participant average. The effect of Derby participation and success on change in XBPA disappeared when the sample was restricted in this way. Hypotheses 1.1 and 1.2 are therefore not confirmed, and these results lend support to McCollum and Jaiclin’s regression to the mean conjecture.
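The sample restriction described above amounts to a simple filter on first half XBPA. The sketch below assumes, as note x suggests, that the two cutoffs are the league average (.0766589) and the Derby participant average (.1138781). The record layout, field names, and toy players are hypothetical and are not drawn from the study’s data.

```python
from dataclasses import dataclass
from typing import List

# Cutoffs reported in note x of this paper.
LEAGUE_AVG_XBPA = 0.0766589
DERBY_AVG_XBPA = 0.1138781


@dataclass
class PlayerSeason:
    """Hypothetical record layout; field names are illustrative, not the study's."""
    player: str
    first_half_xbpa: float
    derby: bool


def restrict_by_first_half(seasons: List[PlayerSeason], cutoff: float) -> List[PlayerSeason]:
    """Keep player-seasons whose first half XBPA is at or above the cutoff."""
    return [s for s in seasons if s.first_half_xbpa >= cutoff]


# Toy records for illustration only.
sample = [
    PlayerSeason("A", 0.050, False),
    PlayerSeason("B", 0.090, False),
    PlayerSeason("C", 0.120, True),
]
above_league = restrict_by_first_half(sample, LEAGUE_AVG_XBPA)
above_derby = restrict_by_first_half(sample, DERBY_AVG_XBPA)
```

Each restricted sample would then be passed to the same regression used for the full sample.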

Turning to the relationship between Derby success and change in SOPA between halves, an effect was initially found: those who hit more home runs during the Derby tended to see a larger increase in their second half SOPA relative to their first half SOPA. This relationship, however, evaporates when a measure of home runs squared is included. This suggests a lack of robustness to this finding, and thus hypothesis 2.2 cannot be confirmed.

Based upon these findings, at least with respect to XBPA and Derby success, the “Home Run Derby Curse” appears to be more of a Home Run Derby myth. The results concerning Derby participation and SOPA, however, tell a different story. The test of hypothesis 2.1 shows that those who participate in the Derby see a larger increase in their SOPA between halves of the season than players who do not participate in the Derby. As stated above, it is unnecessary to restrict the sample based upon first half SOPA: Derby participants have, on average, a higher first half SOPA than the full sample mean, so regression to the mean would predict their second half SOPA to fall, not rise. Thus, the argument that Derby participants have had an exceptionally strong first half does not explain the SOPA result.

Simply put, Derby participants do see a statistically significant increase in their SOPA relative to non-participants, which lends some credence to the “Home Run Derby Curse” and is consistent with the idea that players change their swings for the Derby. The question that remains, however, is: what is the substantive impact of participation in the Derby on SOPA?

The Substantive Effect of Derby Participation on SOPA

Essentially, Derby participants can expect to see their second half SOPA increase by .005 more over their first half SOPA than players who do not participate in the Derby. The average first half SOPA for those who did not participate in the Derby is .1608855, and the mean number of first half plate appearances for the sample used in this study, excluding Derby participants, is 249.5692. This means that the average Derby non-participant will strike out about 40 times in the first half of the season.

With all variables in the model held at 0, the average second half SOPA for non-participants will be .006 higher than the average first half SOPA, about .1668855. The mean number of second half plate appearances for the sample used in this study, excluding those who participated in the Home Run Derby, is 219.5873. Therefore, an average Derby non-participant will strike out about 37 times in the second half of the season. When the first half and second half are combined, an average player who did not participate in the Home Run Derby can expect to strike out about 77 times.
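The strikeout figures for non-participants are expected SOPA multiplied by plate appearances. A minimal sketch using only the means reported in the text and the table 3 intercept is shown below; the names are illustrative, and it assumes, as the equation for a Derby participant’s second half SOPA implies, that the model predicts first half SOPA minus second half SOPA.

```python
# Means for Derby non-participants, as reported in the text.
FIRST_HALF_SOPA = 0.1608855
FIRST_HALF_PA = 249.5692
SECOND_HALF_PA = 219.5873
INTERCEPT = -0.006  # Table 3, column 1


def expected_strikeouts(sopa: float, pa: float) -> float:
    """Strikeouts implied by a strikeouts-per-plate-appearance rate over pa PAs."""
    return sopa * pa


# The model predicts (first half SOPA - second half SOPA) = intercept when all
# other variables are 0, so second half SOPA = first half SOPA - intercept.
second_half_sopa = FIRST_HALF_SOPA - INTERCEPT                          # 0.1668855
first_half_k = expected_strikeouts(FIRST_HALF_SOPA, FIRST_HALF_PA)      # about 40.2
second_half_k = expected_strikeouts(second_half_sopa, SECOND_HALF_PA)   # about 36.6
season_k = first_half_k + second_half_k                                 # about 76.8
```

Unrounded, the first half, second half, and season totals come to roughly 40.2, 36.6, and 76.8 strikeouts.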

The mean first half SOPA for a Derby participant is .1669383, and the average number of first half plate appearances for Derby participants is 356.9345. Thus, the average Derby participant can expect to strike out about 60 times in the first half of the season.

The mean second half SOPA for a Derby participant can be understood as:

2nd Half SOPA = .1669383+(-α)+(-β)

where α is the intercept (-.006) and β is the coefficient for participation in the Derby (-.005). With all other variables held constant at 0, Derby participants can expect a second half SOPA of .1779383. The average number of second half plate appearances for Derby participants is 291.7209, so those who participate in the Derby can expect about 52 strikeouts in the second half of the season. This suggests that a Derby participant can expect to strike out about 112 times during the season.
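The participant calculation follows the same pattern, with both the intercept and the participation coefficient subtracted from first half SOPA, as in the equation above. This is a sketch under that reading of the model; the function and constant names are illustrative.

```python
# Means for Derby participants and Table 3, column 1 coefficients, as reported.
FIRST_HALF_SOPA = 0.1669383
FIRST_HALF_PA = 356.9345
SECOND_HALF_PA = 291.7209
ALPHA = -0.006  # intercept
BETA = -0.005   # Derby participation


def predicted_second_half_sopa(first_half_sopa: float, alpha: float, beta: float) -> float:
    """Second half SOPA implied by 2nd Half SOPA = 1st Half SOPA + (-alpha) + (-beta)."""
    return first_half_sopa - alpha - beta


sopa2 = predicted_second_half_sopa(FIRST_HALF_SOPA, ALPHA, BETA)  # 0.1779383
first_half_k = FIRST_HALF_SOPA * FIRST_HALF_PA   # about 59.6
second_half_k = sopa2 * SECOND_HALF_PA           # about 51.9
```

Summing the unrounded half-season figures gives about 111.5 strikeouts; the season figure of 112 is the sum of the rounded halves (60 + 52).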

This is a substantial difference in strikeouts; however, much of it reflects the larger number of plate appearances Derby participants receive. To accurately assess the true substantive effect of Derby participation, one must apply a common number of plate appearances to Derby participants and non-participants alike. For the purposes of this paper, I make the reasonable assumption that players will have about 300 plate appearances in the second half of the season.

Using 300 plate appearances, those who did not participate in the Derby can expect 50 strikeouts in the second half of the season, whereas those who did participate in the Derby can expect 53 strikeouts in the second half of the season.[xiv] This difference of three strikeouts does not seem substantively large.

Further, it must be noted that the coefficient for Derby participation (-.005) is only an estimate; its 95% confidence interval ranges from -.01 to -.0002. If the true coefficient is -.01, a Derby participant would strike out about 5 more times than a non-participant over 300 second half plate appearances. If the true coefficient is -.0002, a Derby participant could expect, all other things held equal, to strike out about 2 more times over 300 second half plate appearances than a player who did not participate. In essence, the difference in SOPA between the halves of the season due to participation in the Derby is statistically significant, but substantively negligible.
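The 300 plate appearance comparison and the confidence interval endpoints can be checked with a short sketch. It uses the predicted second half SOPA for non-participants (first half mean minus the intercept) and varies only the participation coefficient; the function name is hypothetical.

```python
# Inputs from the text: predicted second half SOPA for non-participants
# (first half mean minus the intercept) and the participant first half mean.
NON_PARTICIPANT_SOPA2 = 0.1608855 - (-0.006)
PARTICIPANT_FIRST_HALF_SOPA = 0.1669383
ALPHA = -0.006
PA = 300  # the paper's assumed second half plate appearances


def strikeout_gap(beta: float, pa: float = PA) -> float:
    """Participant minus non-participant expected second half strikeouts."""
    participant_sopa2 = PARTICIPANT_FIRST_HALF_SOPA - ALPHA - beta
    return (participant_sopa2 - NON_PARTICIPANT_SOPA2) * pa


# CI endpoint nearest zero, point estimate, and CI endpoint farthest from zero.
for beta in (-0.0002, -0.005, -0.01):
    print(beta, round(strikeout_gap(beta), 1))
```

Unrounded, the gaps are about 1.9, 3.3, and 4.8 strikeouts, which round to the 2, 3, and 5 cited in the text.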

Broader Implications and Future Research

Although the effects of Derby participation on SOPA are substantively minimal, the takeaway point of this study is that a “Home Run Derby Curse” does exist, at least with respect to strikeouts. Further, the confirmation of H2.1 suggests that Derby participants may be altering their swings to generate more power during the Derby, and that this is affecting their swings in the second half of the season.

Regardless of the substantive effects, this is an important finding. If a Derby participant’s swing is altered enough that he begins striking out at a higher rate than non-participants in the second half of the season, the question we must ask is: what other effects does this altered swing have? Does it increase a Derby participant’s flyball ratio? Are Derby participants more likely to see a drop in batting average and walks?

Beyond these questions, future research into the “Curse” should also examine how the Derby alters a player’s swing. One possible avenue lies in measuring changes in a hitter’s stance (e.g., the distance between his feet or the angle of his back elbow) after the Derby relative to his stance before the Derby.

Works Cited:

J.P. Breen, “The Home Run Derby Curse,” FanGraphs, July 11, 2012, accessible via http://www.fangraphs.com/blogs/the-home-run-derby-curse/.

Jason Catania, “Is there Really a 2nd-Half MLB Home Run Derby Curse?,” Bleacher Report, July 15, 2013, accessible via http://bleacherreport.com/articles/1702620-is-there-really-a-second-half-mlb-home-run-derby-curse.

Derek Carty, “Do Hitters Decline After the Home Run Derby?,” Hardball Times, July 13, 2009, accessible via http://www.hardballtimes.com/do-hitters-decline-after-the-home-run-derby/.

Evan Kendall, “Does more power really mean more strikeouts?,” Beyond the Box Score, January 12, 2014, accessible via http://www.beyondtheboxscore.com/2014/1/12/5299086/home-run-strikeout-rate-correlation.

Tim Marchman, “Exploring the impact of the Home Run Derby on its participants,” Sports Illustrated, July 12, 2010, accessible via http://sportsillustrated.cnn.com/2010/writers/tim_marchman/07/12/hr.derby/.

Joseph McCollum and Marcus Jaiclin, “Home Run Derby Curse: Fact or Fiction?,” Baseball Research Journal 39, no. 2, 2010.

[i] It could be argued that those players who participate in the Derby are also exceptional players, and therefore, this conjecture will not hold for the majority of those who participate in the Derby. At first glance, this would appear to create a problem for the analysis in this piece; however, it does not. It would only present a problem if being exceptional led to a stronger positive correlation between a power swing and strikeouts, that is, if a power stroke leads to more strikeouts for exceptional players than for average players. If exceptional hitters are less likely to show a positive correlation between power and strikeouts, and Derby participants are exceptional players, we would expect a smaller increase in strikeout rate among these players when they begin attempting to hit for greater power. Essentially, a violation of this assumption leads to a more conservative measurement.

[ii] Some players who participated in the Derby were coded as “0,” as they did not hit any home runs.

[iii] A player is coded as a 1 if he was traded during the season and a 0 if he was not traded.

[iv] A player is coded as a 1 if the difference in his plate appearances between the first and second halves of the season (Pre All-Star Break PAs – Post All-Star Break PAs) is greater than the observed average in the data (39.8). A player is coded as a 0 if the difference in his plate appearances between the first and second halves of the season is less than the observed average in the data.

[v] A player is coded as a 1 if the number of plate appearances he had during the first half of the season is greater than the observed average in the data (342), and as a 0 otherwise.
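The plate appearance dummies in notes iv and v can be sketched as threshold rules on the reported sample means. The function names are hypothetical, and the sketch assumes every player not coded 1 is coded 0.

```python
# Cutoffs reported in notes iv and v.
DIMINISHED_PA_CUTOFF = 39.8       # mean of (first half PA - second half PA)
HIGH_FIRST_HALF_PA_CUTOFF = 342   # mean first half PA


def diminished_pas(first_half_pa: int, second_half_pa: int) -> int:
    """1 if the drop in PAs after the break exceeds the sample mean, else 0."""
    return int(first_half_pa - second_half_pa > DIMINISHED_PA_CUTOFF)


def high_first_half_pas(first_half_pa: int) -> int:
    """1 if first half PAs exceed the sample mean, else 0."""
    return int(first_half_pa > HIGH_FIRST_HALF_PA_CUTOFF)
```

Because the note iv cutoff (39.8) is not a whole number, no integer difference in plate appearances can fall exactly on it, so the rule never has to break a tie.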

[vi] This variable is a dummy variable, with a player being coded as a 1 if he spent the entire season in the National League, and a 0 if he did not spend the entire season in the National League.

[vii] Although the “Steroid Era” is somewhat difficult to pin down, for the purposes of this paper it is assumed to run from 1990 through 2005. Therefore, an observation from 1990 through 2005, inclusive, is coded as a 1, and an observation outside this period is coded as a 0.

[viii] For the purposes of this paper, the era of “greenies” is deemed to run from 1985 through 2005. Therefore, an observation from 1985 through 2005, inclusive, is coded as a 1, and an observation outside this period is coded as a 0.

[ix] Interleague play began in 1997 and continues to the present. In this paper, an observation from 1995 through 2013, inclusive, is coded as a 1, and an observation outside this period is coded as a 0.
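The era dummies in notes vii through ix are inclusive year windows. A minimal sketch, using the coding windows exactly as stated in those notes, is shown below; the dictionary keys and function name are hypothetical.

```python
from typing import Dict, Tuple

# Inclusive year windows as stated in notes vii through ix.
ERA_WINDOWS: Dict[str, Tuple[int, int]] = {
    "steroids": (1990, 2005),
    "greenies": (1985, 2005),
    "interleague": (1995, 2013),
}


def era_dummies(season: int) -> Dict[str, int]:
    """Return a 0/1 indicator for each era window that contains the season."""
    return {name: int(start <= season <= end)
            for name, (start, end) in ERA_WINDOWS.items()}
```

For example, a 1985 observation is coded 1 for the “greenies” era and 0 for the other two.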

[x] The difference in XBPA variable maintains the same basic distribution when the sample is restricted to those with an XBPA equal to or greater than the league average (.0766589), as well as equal to or greater than the Derby participant average (.1138781).

[xi] The mean first half XBPA for those who participate in the Derby is .114, whereas the mean first half XBPA for those who do not participate in the Derby is .077.

[xii] When this model is run including a variable for “home runs squared” the results remain similar.

[xiii] When this model is run including a variable for “home runs squared” the results remain similar.

[xiv] One could quibble with the estimate of 300 second half plate appearances; however, it is important to note that the gap in expected second half strikeouts scales with plate appearances. The expected second half SOPAs of Derby participants (.1779383) and non-participants (.1668855) differ by about .011, or roughly 1.1 strikeouts per 100 plate appearances, of which .5 per 100 plate appearances is attributable to the Derby participation coefficient (-.005) itself. Thus, if one were to use 200 plate appearances, the difference in average second half strikeout totals between Derby participants and non-participants would be about 2.2. Additionally, if one were to use 400 plate appearances, the difference would be about 4.4.
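How the strikeout gap depends on the assumed number of second half plate appearances can be sketched as follows, splitting the gap into the part carried by the participation coefficient and the remainder. The split follows from the model as described in the text: participant minus non-participant second half SOPA equals the difference in first half SOPA minus β. The function names are hypothetical.

```python
# Expected second half SOPAs from the text, and the participation coefficient.
PARTICIPANT_SOPA2 = 0.1779383
NON_PARTICIPANT_SOPA2 = 0.1668855
BETA = -0.005


def total_gap(pa: float) -> float:
    """Participant minus non-participant expected second half strikeouts."""
    return (PARTICIPANT_SOPA2 - NON_PARTICIPANT_SOPA2) * pa


def participation_share(pa: float) -> float:
    """Part of the gap attributable to the Derby participation coefficient alone."""
    return -BETA * pa


for pa in (200, 300, 400):
    print(pa, round(total_gap(pa), 1), round(participation_share(pa), 1))
```

The remainder of each gap comes from Derby participants’ higher first half SOPA, which the model carries into the second half.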