The Red Sox Evolve Their Swings In-Game and the Results Are Incredible

The Boston Red Sox's almost romantic approach to the plate has been one of the major themes of their journey to become the first team with 60 wins. Last night's display of home runs and precise hitting, behind Chris Sale's robotic approach to pitching, gave the Red Sox a 10-5 victory over the Kansas City Royals for their 60th win, another link in a long chain of accomplishments. More impressive, however, is the Red Sox's micro approach to each game. They have not only revolutionized the average statistics compiled over the course of a season but have also revolutionized how they approach the plate inning by inning. The romantic plate approach is more than good batting: it is the start of a methodical study of opposing pitchers that pays off in innings five and six.

In an interview with 710 ESPN Seattle's Danny, Dave, and Moore, Seattle Mariners pitcher Marco Gonzales casually remarked of his struggles against the Red Sox on June 24 that they were "taking swings we haven't seen before." Gonzales lasted only six innings against the Red Sox, allowing seven hits and five runs while striking out six. The fifth inning was the moment the game swung in the Red Sox's favor, as they scored three runs.

Naturally, this observation may say more about Gonzales' pitching that day than about the Red Sox. Yet it was enticing enough to warrant investigation. The results were incredible, and they explain why the Red Sox meta of plate patience is about more than being disciplined: they meticulously study pitchers through the first few innings, which sets up destructive fifth and sixth innings.

Before delving into the data, two caveats must be established. First, the Red Sox are, on average, destructive regardless of the inning. Their jump in innings five and six is not why they are good, but why they are atop MLB this year. Second, a rise in offensive statistics in innings five and six is a trend across the league; it would be easy to dismiss the Red Sox's rise as the best batters capitalizing on "third time through the order" deterioration. Again, however, the Red Sox are taking the seemingly inevitable deterioration of pitchers over the course of a game and amplifying it.

In innings one through three, the Red Sox hold a .270 batting average with a 20.5 percent strikeout rate, an 8.4 percent walk rate, a .467 SLG, and a 117 wRC+, all rates that make the Red Sox a top MLB team on their own. If the numbers stopped here, the Red Sox would still be a good team. However, as mentioned, the Red Sox jump to great in innings five and six. They post a .292 batting average, a strikeout rate of only 15.7 percent, a 7.9 percent walk rate, a .538 SLG (.240 ISO!), and a wRC+ of 139.
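To make the size of that jump concrete, here is a minimal sketch that subtracts the early-inning line from the late-inning line. It uses only the team figures quoted above; the dictionary layout and the `split_deltas` helper are illustrative names, not anything taken from FanGraphs or Baseball Savant.

```python
# Team splits as quoted in the article (innings 1-3 vs. innings 5-6).
# K% and BB% are in percentage points; AVG and SLG are on the usual 0-1 scale.
early = {"AVG": 0.270, "K%": 20.5, "BB%": 8.4, "SLG": 0.467, "wRC+": 117}
late = {"AVG": 0.292, "K%": 15.7, "BB%": 7.9, "SLG": 0.538, "wRC+": 139}


def split_deltas(before, after):
    """Return after-minus-before for every stat, rounded to three decimals."""
    return {stat: round(after[stat] - before[stat], 3) for stat in before}


deltas = split_deltas(early, late)
for stat, change in deltas.items():
    # The "+" flag prints an explicit sign so gains and drops are easy to scan.
    print(f"{stat:>5}: {change:+g}")
```

Read this way, the strikeout rate falls by 4.8 percentage points while batting average rises 22 points, SLG rises 71 points, and wRC+ climbs by 22.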

On a micro level, the jump has benefited Mitch Moreland and Mookie Betts the most; Moreland has an .808 SLG and Betts has a 234 wRC+. Even Rafael Devers sees a sharp increase in effectiveness in these innings, raising his egregious .198 average in innings one through three to a .304 average in innings five and six.

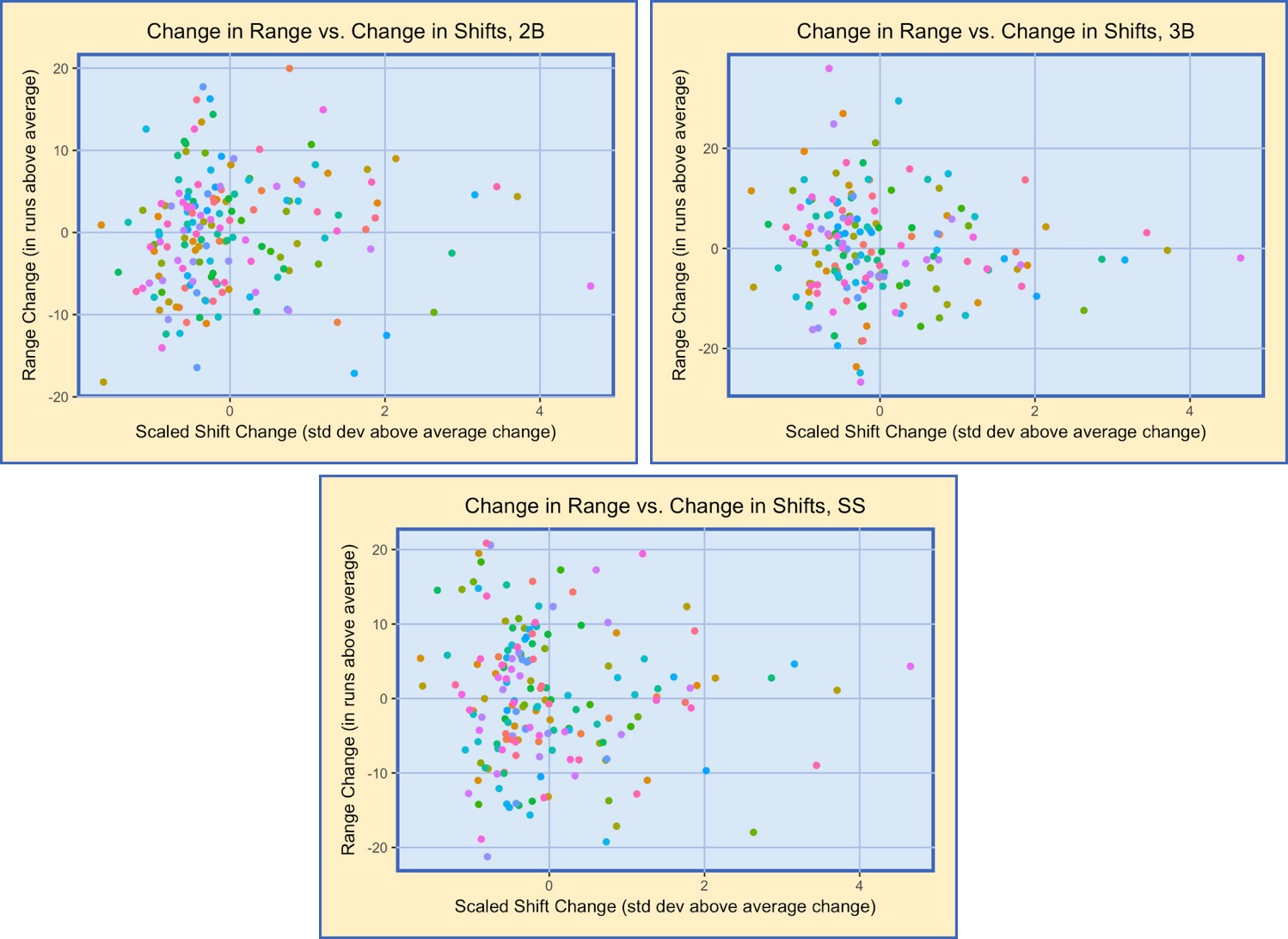

Mechanically, the Red Sox, as a team, change the type of pitches they attack. Produced from Baseball Savant, here is a graphic of the pitch movement attacked in innings one through three; here is the comparative graphic for innings five and six. The graphics show that most of the pitches they go after at the beginning of the game have little horizontal movement and more vertical movement, which makes them easier to see. As the game goes on, they dramatically increase their SLG by attacking pitches with sharp horizontal movement, even on low pitches.

In practice, it might be said that the Red Sox study through the first few innings, waiting to see how pitchers will attack them with movement. Their contact in innings five and six reflects that study, as evidenced by J.D. Martinez's expected SLG of .936, Betts' of .843, and Andrew Benintendi's of .757. Even Devers sees his xSLG increase from .389 to .545.

The Red Sox's plate discipline is purposeful, thoughtful, and built for the length of a game and a season. They literally improve the quality of their swings and contact as the game goes on, a clear demonstration of why analytical discipline is important to success.
