Hierarchical Clustering For Fun and Profit

Player comps! We all love them, and why not. It’s fun to hear how Kevin Maitan swings like a young Miguel Cabrera or how Hunter Pence runs like a rotary telephone thrown into a running clothes dryer. They’re fun and helpful, because if there’s a player we’ve never seen before, it gives us some idea of what they’re like.

When it comes to creating comps, there’s more than just the eye test. Chris Mitchell provides Mahalanobis comps for prospects, and Dave recently did something interesting to make a hydra-comp for Tim Raines. We’re going to proceed with my favorite method of unsupervised learning: hierarchical clustering.

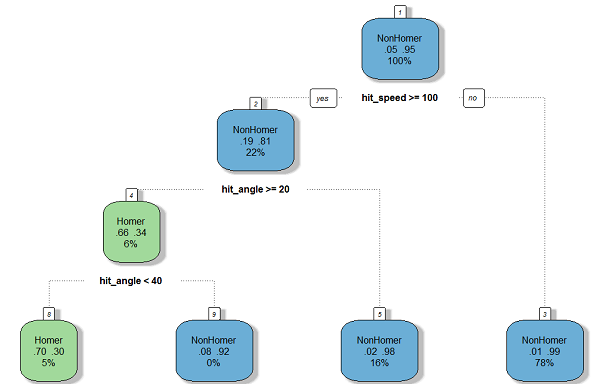

Why hierarchical clustering? Well, for one thing, it just looks really cool:

That right there is a dendrogram showing a clustering of all player-seasons since the year 2000. “Leaf” nodes on the left side of the diagram represent the seasons, and the closer together, the more similar they are. To create such a thing you first need to define “features” — essentially the points of comparison we use when comparing players. For this, I’ve just used basic statistics any casual baseball fan knows: AVG, HR, K, BB, and SB. We could use something more advanced, but I don’t see the point — at least this way the results will be somewhat interpretable to anyone. Plus, these stats — while imperfect — give us the gist of a player’s game: how well they get on base, how well they hit for power, how well they control the strike zone, etc.

Now hierarchical clustering sounds complicated — and it is — but once we’ve made a custom leaderboard here at FanGraphs, we can cluster the data and display it in about 10 lines of Python code.

import pandas as pd

from scipy.cluster.hierarchy import linkage, dendrogram

# Read csv

df = pd.read_csv(r'leaders.csv')

# Keep only relevant columns

data_numeric = df[['AVG','HR','SO','BB','SB']]

# Create the linkage array and dendrogram

w2 = linkage(data_numeric,method='ward')

labels = tuple(df.apply(lambda x: '{0} {1}'.format(x[0], x[1]),axis=1))

d = dendrogram(w2,orientation='right',color_threshold = 300)

Let’s use this to create some player comps, shall we? First let’s dive in and see which player-seasons are most similar to Mike Trout’s 2016:

| Season | Name | AVG | HR | SO | BB | SB |

| 2001 | Bobby Abreu | .289 | 31 | 137 | 106 | 36 |

| 2003 | Bobby Abreu | .300 | 20 | 126 | 109 | 22 |

| 2004 | Bobby Abreu | .301 | 30 | 116 | 127 | 40 |

| 2005 | Bobby Abreu | .286 | 24 | 134 | 117 | 31 |

| 2006 | Bobby Abreu | .297 | 15 | 138 | 124 | 30 |

| 2013 | Shin-Soo Choo | .285 | 21 | 133 | 112 | 20 |

| 2013 | Mike Trout | .323 | 27 | 136 | 110 | 33 |

| 2016 | Mike Trout | .315 | 29 | 137 | 116 | 30 |

Remember Bobby Abreu? He’s on the Hall of Fame ballot next year, and I’m not even sure he’ll get 5% of the vote. But man, take defense out of the equation, and he was Mike Trout before Mike Trout. The numbers are stunningly similar and a sharp reminder of just how unappreciated a career he had. Also Shin-Soo Choo is here.

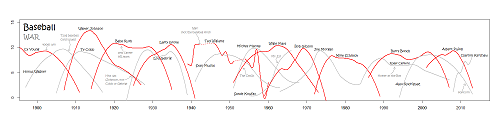

So Abreu is on the short list of most underrated players this century, but for my money there is someone even more underrated, and it certainly pops out from this clustering. Take a look at the dendrogram above — do you see that thin gold-colored cluster? In there are some of the greatest offensive performances of the past 20 years. Barry Bonds’s peak is in there, along with Albert Pujols’s best seasons, and some Todd Helton seasons. But let’s see if any of these names jump out at you:

First of all, holy hell, Barry Bonds. Look at how far separated his 2001, 2002 and 2004 seasons are from anyone else’s, including these other great performances. But I digress — if you’re like me, this is the name that caught your eye:

| Season | Name | AVG | HR | SO | BB | SB |

| 2000 | Brian Giles | .315 | 35 | 69 | 114 | 6 |

| 2001 | Brian Giles | .309 | 37 | 67 | 90 | 13 |

| 2002 | Brian Giles | .298 | 38 | 74 | 135 | 15 |

| 2003 | Brian Giles | .299 | 20 | 58 | 105 | 4 |

| 2005 | Brian Giles | .301 | 15 | 64 | 119 | 13 |

| 2006 | Brian Giles | .263 | 14 | 60 | 104 | 9 |

| 2008 | Brian Giles | .306 | 12 | 52 | 87 | 2 |

Brian Giles had seven seasons that, according to this method at least, are among the very best this century. He had an elite combination of power, batting eye, and a little bit of speed that is very rarely seen. Yet he didn’t receive a single Hall of Fame vote, for various reasons (short career, small markets, crowded ballot, PED whispers, etc.) He’s my vote for most underrated player of the 2000s.

This is just one application of hierarchical clustering. I’m sure you can think of many more, and you can easily do it with the code above. Give it a shot if you’re bored one offseason day and looking for something to write about.