Batted Balls and Adam Eaton’s Throwing Arm

Adam Eaton, he of 6 WAR, is now on the Nationals, and there has been a lot of discussion about the move. It would seem that maybe 2–3 of those wins are attributable to his robust defensive play in 2016. 20 DRS!

In Dave Cameron’s article “Maybe Adam Eaton Should Stay in Right Field,” Dave points out that Eaton led MLB with 18 assists and added significant value by “convincing them not to run in the first place.”

What Dave and most of the public defensive metrics I've seen tend to ignore is the characteristics of the ball in play, i.e. launch angle and exit velocity, and their impact on the outfielder's performance. With only a limited amount of really good Statcast data, I understand this is still hard to do, but it's time to start. You can easily envision that balls hit to outfielders in different ways (i.e. at different launch angles and velocities) can result in different outcomes, whether that is the likelihood of an out being made on the ball in play or how the ball interacts with runners on base. Ignoring this data has nagged at me for a while now, as I love to play with the idea of outfield defense (just look at my other community posts).

So can some of these stats explain Adam Eaton's defensive prowess this season? Maybe. I had already downloaded all of the 2016 outfield ball-in-play data from the Statcast search engine, so I fired it up. I cleaned the data up to include the outfielder's name and position for each play. Using this, I can filter the data for the situation Dave describes, which is:

A single is hit to right field with a runner on first base.

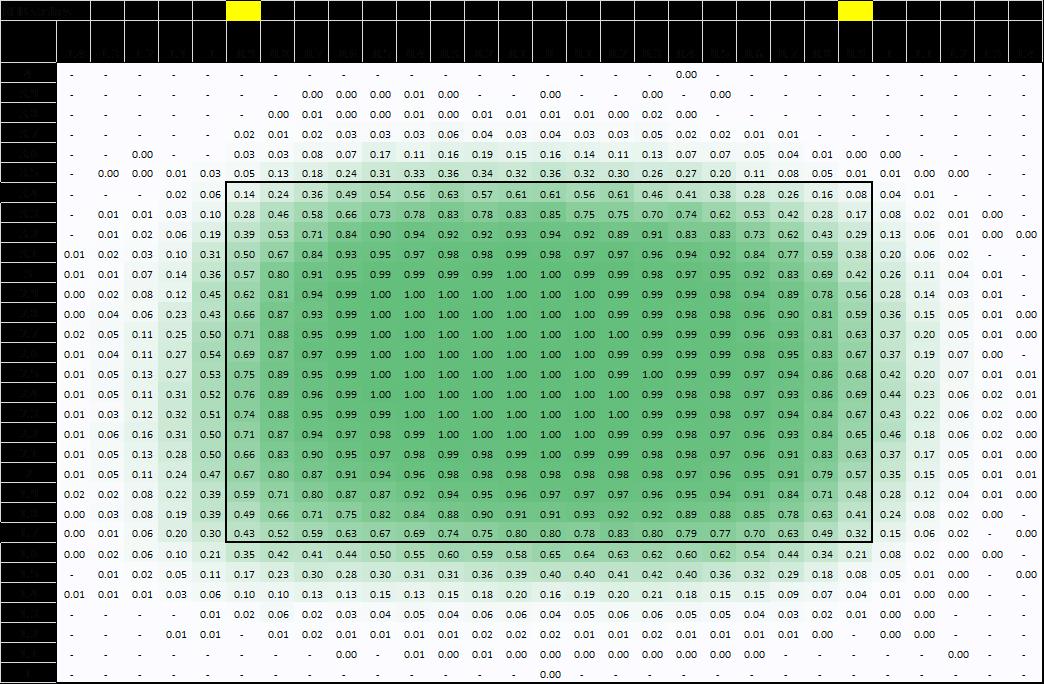
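For anyone who wants to try this at home, here is a minimal sketch of that filter. The `Play` record and its field names are my own invention for illustration, not the actual Statcast export columns, and the sample rows are made up.

```python
from dataclasses import dataclass


@dataclass
class Play:
    # Hypothetical record layout; these are NOT real Statcast column names.
    fielder: str
    position: str            # "LF", "CF", or "RF"
    event: str               # "single", "double", ...
    runner_on_first: bool
    launch_angle: float      # degrees
    exit_velocity: float     # mph
    runner_took_third: bool


def singles_to_right_with_runner_on_first(plays):
    """Keep only singles fielded in right field with a runner on first."""
    return [
        p for p in plays
        if p.event == "single" and p.position == "RF" and p.runner_on_first
    ]


# Made-up sample rows, just to exercise the filter.
sample_plays = [
    Play("Fielder A", "RF", "single", True, 4.0, 95.0, False),
    Play("Fielder A", "CF", "single", True, 12.0, 88.0, True),    # wrong position
    Play("Fielder B", "RF", "double", True, 18.0, 101.0, True),   # not a single
    Play("Fielder B", "RF", "single", False, 9.0, 79.0, False),   # nobody on first
    Play("Fielder B", "RF", "single", True, 15.0, 72.0, True),
]

filtered = singles_to_right_with_runner_on_first(sample_plays)
for p in filtered:
    print(p.fielder, p.launch_angle, p.runner_took_third)
```

Filtering on position rather than on player is what drops a right fielder's innings in center field from the sample.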

Before we go into the individual outfielders, let’s look in general:

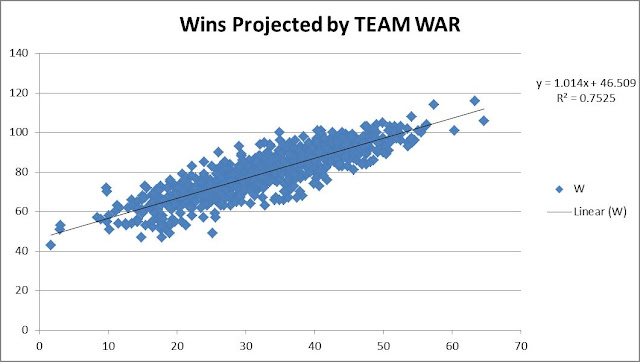

Looking at the plays in general, you can see that a runner is significantly less likely to advance from 1st to 3rd on a single to right field if the ball is hit at 5 degrees vs. 15 degrees. The rate roughly doubles, from ~20% at 5 degrees to ~40% at 15 degrees. Wow. That's huge, and with an R-squared of nearly 50%, we're talking about roughly half of the variation in the 1st-to-3rd advance rate being tied to launch angle. (The chart is basically parabolic if you include the negative launch angles, which do appear in the data set but with much less frequency, which is why I removed those data points. But it makes sense that it would be that way.)
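Here is a rough sketch of how a chart like that can be built: bucket the plays by launch angle, compute the share of runners who took third in each bucket, and fit a straight line through the bucket rates. The 5-degree bucket width, the function names, and the toy data are all assumptions of mine for illustration; they are not the actual 2016 numbers.

```python
from collections import defaultdict


def advance_rate_by_angle(plays, bin_width=5.0, min_angle=0.0):
    """plays: iterable of (launch_angle_deg, took_third) pairs.

    Returns {bin_start: share of runners who went 1st to 3rd}.
    Plays below min_angle are dropped, mirroring the removal of
    negative launch angles.
    """
    counts = defaultdict(lambda: [0, 0])  # bin_start -> [plays, advances]
    for angle, took_third in plays:
        if angle < min_angle:
            continue
        bin_start = (angle // bin_width) * bin_width
        counts[bin_start][0] += 1
        counts[bin_start][1] += int(took_third)
    return {b: adv / n for b, (n, adv) in sorted(counts.items())}


def linear_fit_r_squared(xs, ys):
    """Ordinary least-squares line through (xs, ys), plus its R-squared."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = (sxy * sxy) / (sxx * syy)
    return slope, intercept, r_squared


# Toy data only: (launch angle, did the runner take third?)
toy_plays = [
    (-3, False),                                      # dropped: negative angle
    (1, False), (2, False), (3, False), (4, True),    # 0-5 degrees: 1 of 4
    (6, True), (7, False), (8, False), (9, True),     # 5-10 degrees: 2 of 4
    (11, True), (12, True), (13, False), (14, True),  # 10-15 degrees: 3 of 4
]

rates = advance_rate_by_angle(toy_plays)
slope, intercept, r_squared = linear_fit_r_squared(list(rates), list(rates.values()))
print(rates)
print(slope, intercept, r_squared)
```

Note that an R-squared computed on bucket rates like this describes how well a line fits the bucket averages, not how predictable any single runner's decision is.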

I did this same analysis using exit velocity and it wasn't nearly as conclusive, though there was a downward trend, i.e. guys were less likely to advance on singles hit at 100 mph than they were on singles hit at 60 mph. The R-squared was ~13%.

So now that we see that the angle at which the BIP comes to the outfield can make a big difference, who were the lucky recipients in the outfield of runner-movement-prevention balls in play? When you filter out anybody who made fewer than 20 plays of this type, you end up with Eaton at No. 2 with an average angle of 4.44 degrees. (Bryce Harper, his now-teammate, who is also mentioned in Dave's article for his similarly excellent runner-movement-prevention, comes in at No. 3. Possibly not a coincidence.)
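The leaderboard itself is just a group-and-average with a minimum-plays cutoff. Below is a minimal sketch; the function name and the toy data are made up, and sorting from lowest to highest average angle is my assumption about how the ranking is ordered (lowest angle being the "luckiest").

```python
from collections import defaultdict
from statistics import mean


def rank_by_mean_launch_angle(plays, min_plays=20):
    """plays: iterable of (fielder_name, launch_angle_deg) pairs.

    Returns [(fielder, mean_angle, n_plays), ...] sorted by mean angle,
    lowest first, keeping only fielders with at least min_plays plays.
    """
    angles = defaultdict(list)
    for fielder, angle in plays:
        angles[fielder].append(angle)
    qualified = [
        (fielder, mean(vals), len(vals))
        for fielder, vals in angles.items()
        if len(vals) >= min_plays
    ]
    return sorted(qualified, key=lambda row: row[1])


# Toy data only; a cutoff of 2 stands in for the real cutoff of 20.
toy_plays = [
    ("Fielder B", 10.0), ("Fielder B", 12.0), ("Fielder B", 14.0),
    ("Fielder A", 4.0), ("Fielder A", 5.0),
    ("Fielder C", 1.0),  # only one play, so he misses the cutoff
]

ranking = rank_by_mean_launch_angle(toy_plays, min_plays=2)
for fielder, avg_angle, n in ranking:
    print(fielder, round(avg_angle, 2), n)
```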

You may notice that my total number of plays for Eaton doesn't match the total Dave references from Baseball-Reference. I filtered out the plays where Eaton was in center field (there were several). I believe my analysis of the Statcast data had Eaton with 48 plays of this type (I think Dave's article mentioned 52 per B-R? I'm not sure what accounts for the difference).

So in conclusion, I do think it's very possible that Adam Eaton's defensive numbers this past season, in particular with regard to his "ARM" scoring, could have been dramatically influenced in a positive direction simply by the balls that were hit to him and the angle at which they came. Clearly this is something he has absolutely no control over, and it could swing in the other direction entirely next year. I do think this area of analysis, in particular for outfield plays, whether it's catches, assists, or even preventing runners from advancing, is a very ripe field for new approaches, which in time should give us a much better idea of players' defensive value.

That said, in this simple analysis the angle only accounted for ~50% of that runner-movement-prevention, which still leaves arm strength and accuracy as likely significant contributors, and I believe Eaton excels at both. And of course he did throw all those guys out. So Eaton should be fine, likely well above average, but just don't expect those easy singles to keep coming to him.