Grading 2013 AL SP Performance with Attention to the 2-D Direction of Batted Balls

Foreword

Two years ago, I began developing a system for evaluating the performance of minor-league pitchers relative to their peers at the same level and in the same league. My goals were to use only game data that could be extracted from the MLB Advanced Media Gameday archives for every level of the minors (ruling out the pitch-outcome data that is available only for AA and AAA games), to ignore whether batted balls went for hits or home runs, and to ignore runs allowed. In brief, the challenge is to use whatever other information can be compiled from the game-specific dataset to arrive at the best approximation of a pitcher’s true performance, judged independently of the factors that tend to fall outside the pitcher’s control (defense, park effects, etc.). What follows, after a description of the method, are the results of applying the latest iteration of this “Fielding and Ballpark Independent Outcomes” method to 2013’s American League starting-biased pitchers.

Basic Steps of Applying the Method to a League

- Download the relevant details of every plate appearance (PA) from the league’s season into a spreadsheet/database

- Derive a 24-outs-baserunners-state run expectancy matrix à la Tango in The Book

- Quantify how each PA of the season impacted the inning’s run expectancy

- Exclude all bunts and foulouts, plus every PA taken by a pitcher

- Reweight the proportion of line drives (LD), outfielder fly balls (OFFB), ground balls (GB), and infielder flyballs (IFFB) by ballpark to offset any stadium- or stringer-related anomalies in play event classifications

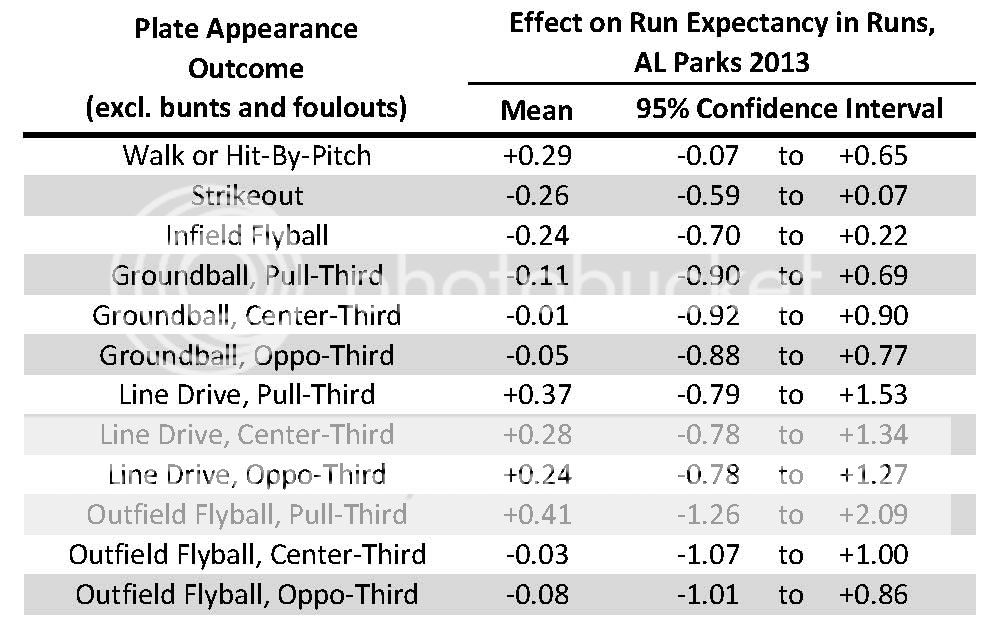

- Referencing the run-expectancy value determined for each PA in Step 3, the corresponding basic description of the play (BB vs HBP vs K vs GB vs IFFB vs OFFB vs LD), and the 2 coordinates indicating where the batted ball was fielded (if there was one), quantify what each of the following 12 general PA event types were worth in terms of runs, on average, for the season: 1) walk or hit-by-pitch, 2) strikeout, 3) IFFB, 4) GB to batter’s pull-field-third of the diamond, 5) GB to batter’s center-field-third, 6) GB to batter’s opposite-field-third, 7) LD to pull-third, 8) LD to center-third, 9) LD to opposite-third, 10) OFFB to pull-third, 11) OFFB to center-third, and 12) OFFB to opposite-third.

- For each pitcher in the study sample, tally up the number of each of the 12 event types that they allowed and in each instance charge them with the exact number of runs determined in Step 6 for the corresponding event type; divide the resulting sum by the total number of events to arrive at a single number for each pitcher that quantifies how a PA against them that season should have affected the inning’s run expectancy, on average (the more negative this number the better the pitcher should have performed on the year)

- Quantify how high or low the pitcher rated on the value in Step 7 versus the mean of the sample on a standard deviation (SD) basis
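For readers who think in code, here is a minimal Python sketch of the run-expectancy bookkeeping and the direction split described in the steps above. It is an illustration under stated assumptions, not the spreadsheet logic actually used: the record keys (`outs`, `bases`, `runs_to_end`), the home-plate location in `home`, and the 15-degree boundary between thirds are all hypothetical choices made for the example.

```python
import math
from collections import defaultdict


def run_expectancy_matrix(plate_appearances):
    """Average runs scored from each (outs, bases) state through the end of the inning.

    Each PA is a dict with hypothetical keys 'outs' (0-2 before the PA),
    'bases' (e.g. '---' or '1-3' before the PA), and 'runs_to_end'
    (runs scored from this PA through the end of the inning).
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for pa in plate_appearances:
        state = (pa["outs"], pa["bases"])
        totals[state] += pa["runs_to_end"]
        counts[state] += 1
    return {state: totals[state] / counts[state] for state in totals}


def pa_run_value(re_matrix, before, after, runs_scored):
    """Run value of one PA: RE(after) - RE(before) + runs scored on the play.

    Pass after=None when the PA makes the third out (run expectancy 0).
    """
    re_after = 0.0 if after is None else re_matrix[after]
    return re_after - re_matrix[before] + runs_scored


def field_third(x, y, bats, home=(125.0, 200.0), boundary_deg=15.0):
    """Label a fielded-ball location 'pull', 'center', or 'opposite'.

    Assumptions (not from the article): image-style coordinates with y
    decreasing toward center field, home plate at `home`, and thirds split
    at +/- boundary_deg from the line through second base.
    `bats` is the side the batter hit from in this PA: 'L' or 'R'.
    """
    angle = math.degrees(math.atan2(x - home[0], home[1] - y))
    if abs(angle) <= boundary_deg:
        return "center"
    side = "left" if angle < 0 else "right"
    pull_side = "left" if bats == "R" else "right"
    return "pull" if side == pull_side else "opposite"
```

Averaging `pa_run_value` over every PA that shares an event type (for example, all GB labeled `pull`) would then give that event type's seasonal run value.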

What were the 12 Event Types Worth in 2013?

The table below shows how the studied event types impacted run expectancy in AL parks during 2013, on average. The 2-D direction of a batted ball tends to matter considerably for LD and even more for OFFB.

For Step 7, then, each pitcher in what follows is charged +0.29 runs for every BB and HBP, -0.26 runs for every K, … and -0.08 runs for every OFFB to the opposite-field third. That sum is then divided by the pitcher’s total number of PA events to arrive at a single number that quantifies what an average PA against the pitcher in 2013 was worth in runs (per run expectancies). Think of that as the equation used to evaluate each pitcher’s performance.
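To make that equation concrete, here is a small Python sketch of the Step 7 arithmetic. Only the three run values quoted above are used; the other nine weights are left out rather than guessed, and the event labels and the toy pitcher's counts are invented purely for illustration.

```python
def average_run_value(event_counts, run_weights):
    """Sum of (count x run value) over event types, divided by total events."""
    total_events = sum(event_counts.values())
    if total_events == 0:
        raise ValueError("pitcher has no qualifying PA events")
    total_runs = sum(n * run_weights[event] for event, n in event_counts.items())
    return total_runs / total_events


# The three 2013 AL run values quoted in the text (labels are hypothetical).
weights = {"BB_HBP": 0.29, "K": -0.26, "OFFB_OPP": -0.08}

# Toy pitcher, NOT real data: 10 BB+HBP, 40 K, 50 OFFB to the opposite third.
toy_counts = {"BB_HBP": 10, "K": 40, "OFFB_OPP": 50}

# (10 * 0.29 + 40 * -0.26 + 50 * -0.08) / 100, i.e. about -0.115 runs per PA
toy_value = average_run_value(toy_counts, weights)
```

A real pitcher's line would carry all 12 event types, with the remaining weights taken from the run-value table.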

Study Sample

The study sample consists of the 101 pitchers who faced more than 200 batters as an American Leaguer in 2013 while averaging more than 10 batters faced per game. Data they accumulated as relievers is included in the analysis. Data they accumulated as National Leaguers is not. As before, any PA that resulted in a bunt or foul out, or that was taken by a pitcher, was excluded.
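The two thresholds can be written as a one-line filter. This Python sketch assumes the batters-faced and games totals have already been restricted to AL appearances; the function and parameter names are made up for the example.

```python
def in_study_sample(batters_faced, games, min_bf=200, min_bf_per_game=10):
    """True if the pitcher faced more than min_bf batters and averaged
    more than min_bf_per_game batters faced per game (AL totals assumed)."""
    if games <= 0:
        return False
    return batters_faced > min_bf and batters_faced / games > min_bf_per_game
```

Both comparisons are strict, matching the "more than" wording above.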

Scores Computed

The overall rating number described in Step 8 above is termed Performance Score. Steps 7 and 8 can be repeated with the non-batted-ball events (BB, HBP, K) stricken from the numerator and denominator at Step 7, and this result is termed Batted Ball Subscore (in short, how should the pitcher have rated versus their peers on batted balls?). To further understand how the pitcher achieved their Performance Score, a Control Subscore (how many SDs high or low was the pitcher’s BB+HBP% versus the study population’s mean?) and a Strikeout Subscore (how many SDs high or low was the pitcher’s K%?) are computed. An Age Score is also calculated that quantifies, in SDs, how young the pitcher was versus the population’s mean age. Given the method’s minor-league origins, the scores are typically expressed on a 20-to-80 style scouting scale where 50 is league-average, scores above 50 bettered league-average, and every 10 points equal 1 SD (percentiles will be listed for those who prefer them).
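The scale conversion can be sketched in a few lines of Python. This assumes the population standard deviation (`pstdev`) is used, which the description above does not specify, and the sample values in the comment are toy numbers rather than results.

```python
from statistics import mean, pstdev


def scouting_scores(values, lower_is_better=True):
    """Convert raw values to a 20-to-80 style scale: 50 is the sample mean
    and every 10 points is 1 SD in the 'better' direction.

    Assumption: population SD (pstdev); the sample SD would shift scores slightly.
    """
    mu = mean(values)
    sd = pstdev(values)
    if sd == 0:
        raise ValueError("all values are identical; SD is zero")
    sign = -1 if lower_is_better else 1
    return [50 + 10 * sign * (v - mu) / sd for v in values]


# Toy run values per PA (not real data); more negative is better for a pitcher.
toy_scores = scouting_scores([-0.02, 0.0, 0.02])
```

For run values per PA and BB+HBP%, lower is better, so `lower_is_better=True` applies; for K%, pass `lower_is_better=False` so that a higher rate earns a higher score.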

2013 American League Starting Pitcher Results

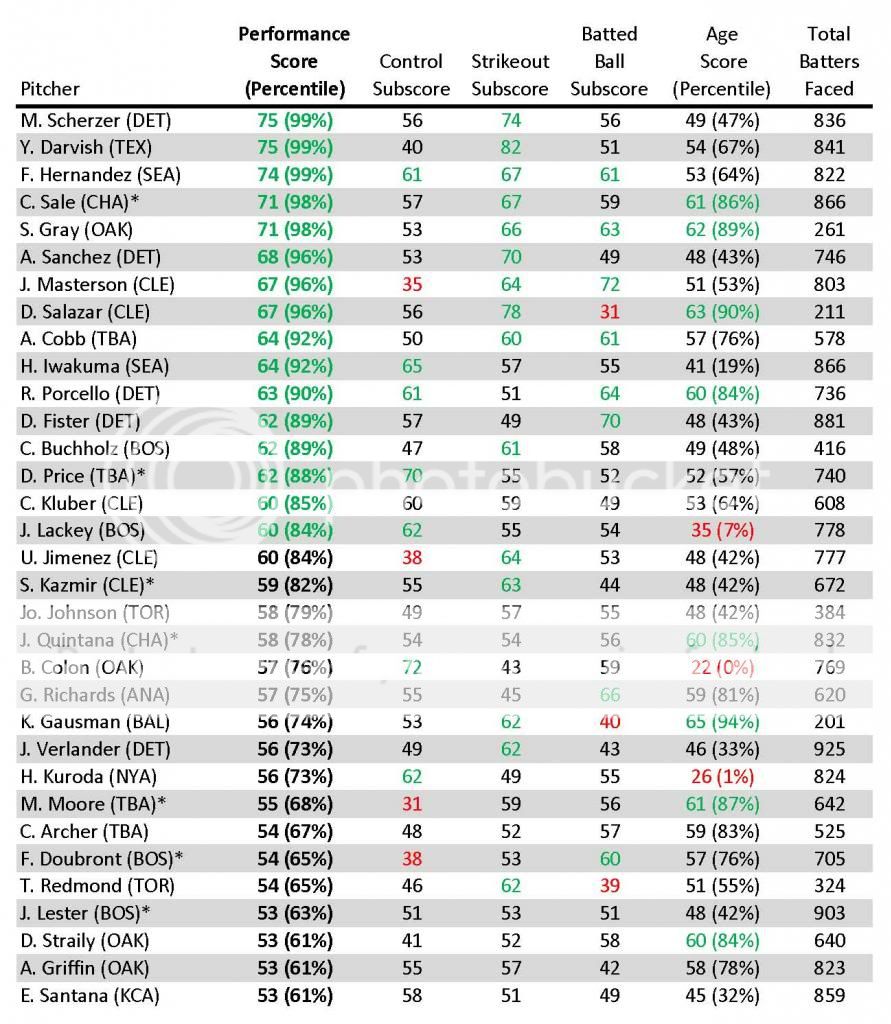

In the tables to follow, green text indicates a value that beat league-average by at least 1 SD (“very good”) while red text indicates a value that trailed league-average by at least 1 SD. Asterisks indicate left-handed throwers.

Sorting by Performance Score

Here are the Top 33 2013 AL SP per the Performance Score measure. Scherzer edged Darvish for the #1 spot as the top of the list somewhat mimicked the BBWAA’s Cy Young vote.

Detroit and Cleveland each landed five in the Top 33 while Boston, Oakland, and Tampa Bay each placed four. Perhaps not coincidentally, those five clubs were also the AL’s playoff teams.

And below are the Middle 34 by Performance Score.

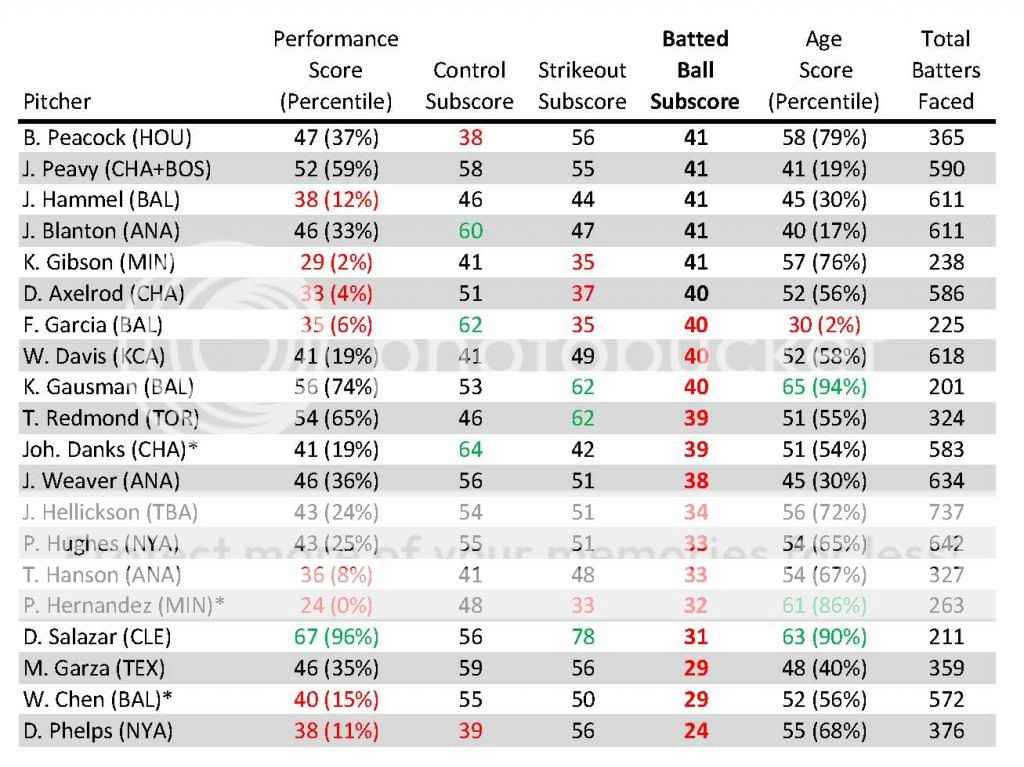

And below are the Bottom 34 by Performance Score.

Pedro Hernandez took last place by a comfortable margin as five other Twins joined him on this dubious list of 34. To further corner the market on these sorts of arms, the club has since inked another of the 34 to a three-year free-agent contract.

Sorting by Batted Ball Subscore

Given the system’s unique weighting of batted-ball types by direction, let us examine how the pitchers grade out on this metric. Below are the Top 20 sorted by Batted Ball Subscore. Masterson nosed out Deduno for top honors. Here, the Twins fare better as three besides Deduno crack the Top 20.

One unique angle of this approach is that a pitcher can be a relatively strong batted-balls performer without being a noteworthy groundball-inducer if their outfield flyballs, line drives, and groundballs are skewed optimally to the least dangerous zones of the field per the batter’s handedness. Colon serves as a prime example of such a pitcher.

Below are the laggards who comprise the Bottom 20.

Garza’s score of 29 as an American Leaguer is somewhat scary given the sort of money he’s likely to command as a free agent (he’d earn about a 35 Batted Ball Subscore if his NL data with the Cubs were factored in). Salazar’s numbers show how a very high rate of strikeouts and good control can successfully offset a dangerous distribution of batted balls by type and direction.

Admittedly, there is a third dimension to each of these batted balls (launch angle off the bat relative to the plane of the field) that would stand to further improve the batted-balls assessment if such information were available.

Other Directions

A variety of things can be done with these numbers, such as breaking them down further into LHB values and RHB values, identifying comparable pitchers who share similar subscores (MLBers to MLBers, MiLBers to MLBers), studying how these values evolve as the minor leaguer rises through the farm towards the majors and their predictive value as to future MLB performance, and so on. And then there’s also the reverse analysis — evaluating hitter performance under a similar lens.

On Tap

Perhaps the most intriguing research question that application of this system raises is, “Would advanced metrics familiarly used to grade pitcher performance yield better results if their equations included batted-ball directional terms?” As a first attempt to test those waters, I plan to follow this up with a post that shows how these results compare to those obtained by variants of more familiar advanced statistical-evaluation methods (SIERA, FIP, etc.). In the interim, I welcome whatever comments, criticisms, and suggestions this readership has to offer.