Dr. Hendricks and Mr. Gray

Randomness and circumstances are important driving forces in everything that happens in the world. Although they usually work hand in hand with our own actions and decisions, they can lift you up when you hit the jackpot at the casino, or knock you down when a falling tree crushes your car (hopefully while you’re sleeping comfortably in your bed). They can also be the difference between an average season on the mound and an outstanding one. Such is the case with the seasons Jon Gray and Kyle Hendricks had this year.

I’m not going to argue that these two pitchers performed equally well this season and that the differences come down to random chance and circumstances, because they didn’t perform equally well. Hendricks was the better pitcher; it just wasn’t by the 2.48 runs their ERAs suggest. The similarities between the two performances can be summarized in basically two stats. Looking at xFIP and SIERA (two important ERA estimators available here at FanGraphs), Hendricks’ numbers of 3.59 and 3.70, respectively, are eerily similar to Gray’s 3.61 and 3.72. Beyond those two, however, the numbers diverge abruptly.

Much like Dr. Jekyll and Mr. Hyde represent the good and the bad within a person, Hendricks’ and Gray’s seasons represent two sides of the same coin. On one side, circumstantial factors and good fortune turned Hendricks’ very good performance into a historic season; on the other, a different set of circumstances and some bad fortune turned Gray’s good performance into a merely average one. In this piece, we’ll take a look at the factors behind these diametrically opposed results.

I’ll start by saying that Kyle Hendricks had a remarkable season. He had an average strikeout rate (8.05 K/9), didn’t walk many batters (2.08 BB/9), and allowed very few longballs (0.71 HR/9). That combination produced a really good 3.20 FIP, which ranked 4th in the majors. His ERA, however, came in all the way down at 2.13, a whopping 1.07 runs lower than his FIP. Big as that gap is, it’s not all that uncommon: nearly 2% of individual seasons by starters in the history of the game have had an E-F (ERA minus FIP) of -1.07 or lower. Nonetheless, a gap that large is hardly sustainable over multiple seasons. In major-league history, out of 2,259 pitchers with at least 500 innings pitched, only two had a career E-F below -1.00, and both of them were full-time relievers (in case you’re curious, they are Alan Mills and Al Levine).
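E-F is plain subtraction, and the “nearly 2% of seasons” figure is a tail share: count the seasons at or below a threshold and divide by the total. Here is a minimal Python sketch of both; the function names and the five-season sample are hypothetical placeholders for illustration, not the historical data set behind the numbers above.

```python
def e_minus_f(era: float, fip: float) -> float:
    """ERA minus FIP; negative means fewer runs allowed than FIP predicts."""
    return era - fip


def share_at_or_below(values: list[float], threshold: float) -> float:
    """Fraction of seasons whose E-F is at or below `threshold`."""
    if not values:
        raise ValueError("need at least one season")
    return sum(v <= threshold for v in values) / len(values)


# Hendricks' 2016 line as quoted above; round to two decimals to avoid float noise.
hendricks_ef = round(e_minus_f(2.13, 3.20), 2)

# Hypothetical E-F values for five made-up seasons (NOT real data).
sample = [-1.20, -0.40, 0.10, 0.35, -0.05]
print(hendricks_ef, share_at_or_below(sample, hendricks_ef))
```

Fed a real list of season-level E-F values, the same call would return the historical share; the rounding step simply guards against floating-point noise in the subtraction.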

On the other end of the spectrum, Jon Gray also had a very solid season. He struck out an outstanding 9.91 batters per 9 innings (9th among qualified starters), had an average walk rate of 3.16 BB/9, and posted a solid home-run rate (0.94 HR/9) that was lower than league average despite his pitching half of his innings at Coors Field. That was good enough for a 3.60 FIP, but his actual ERA rocketed to 4.61. This positive gap of 1.01 is just as unusual as Hendricks’ negative one: about 2% of individual seasons throughout history have produced differences of 1.01 or higher. For easier comparison, here’s a table summarizing both pitchers’ numbers.

So the question remains: what determined such a massive difference in these two pitchers’ results? Let’s dive right in.

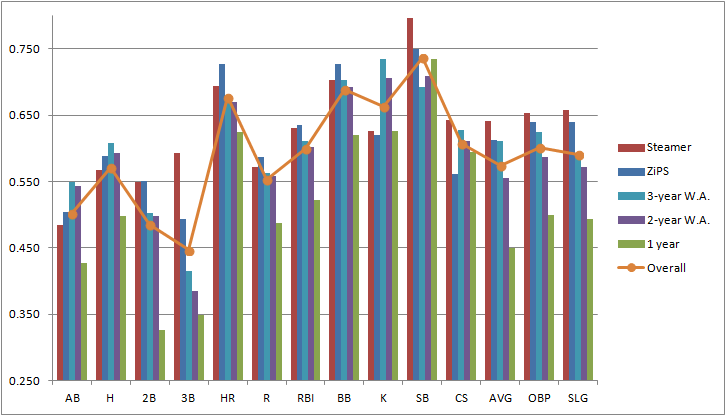
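To make the contrast in the table concrete, here is a short Python sketch that recomputes each pitcher’s E-F and the Gray-minus-Hendricks gap for each ERA estimator, using only the 2016 figures quoted in this article. The dictionary layout and the `gap` helper are just illustrative choices.

```python
# 2016 figures as quoted in this article.
pitchers = {
    "Hendricks": {"ERA": 2.13, "FIP": 3.20, "xFIP": 3.59, "SIERA": 3.70},
    "Gray": {"ERA": 4.61, "FIP": 3.60, "xFIP": 3.61, "SIERA": 3.72},
}


def gap(stat: str) -> float:
    """Gray minus Hendricks for one stat, rounded to two decimals."""
    return round(pitchers["Gray"][stat] - pitchers["Hendricks"][stat], 2)


for name, line in pitchers.items():
    print(name, "E-F:", round(line["ERA"] - line["FIP"], 2))

for stat in ("ERA", "FIP", "xFIP", "SIERA"):
    print(stat, "gap:", gap(stat))
```

The ERA gap works out to 2.48 runs, while the xFIP and SIERA gaps are just 0.02 each, which is the premise of this piece in a few lines of output.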

First, I looked at the correlation coefficients between E-F and a wide array of pitching stats, using data from every pitcher in MLB history with 500+ innings. As a general rule of thumb, a correlation coefficient between 0.40 and 0.69 (in absolute value) indicates a strong relationship between two variables. The following table shows the stats whose correlation with E-F was at least 0.40 in absolute value:

Welp, that’s a pretty lame table. Keep in mind, I analyzed correlations for stats as varied as pitch-type percentages, vertical and horizontal movement by pitch type, and soft-, medium-, and hard-hit rates, as well as K/9, BB/9, HR/9, and HR/FB%. None of those had even a moderate relationship with E-F, so let’s stick with the stats presented in the table.

The first two stats are really no surprise. FIP essentially assumes league-average BABIP and LOB% when estimating what a pitcher’s ERA should look like. So if a pitcher has a high BABIP, FIP will estimate a lower ERA than the actual one, resulting in a higher E-F; hence the positive correlation. Conversely, if a pitcher has a high LOB%, he’ll allow fewer runs than his FIP would suggest, resulting in a lower E-F, which explains the negative correlation shown in the table. The last stat, however, came as a real surprise, at least to me. ERA appears to be positively correlated with E-F, which means that pitchers with higher ERAs tend to have higher E-F than pitchers with lower ERAs.
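For reference, FanGraphs defines FIP as (13 × HR + 3 × (BB + HBP) - 2 × K) / IP plus a season-specific constant that puts FIP on the ERA scale. The Python sketch below makes the point above explicit: hits on balls in play never enter FIP, so two pitchers with identical strikeouts, walks, hit batters, and homers get the same FIP even if one allows far more runs. The function names and sample lines are hypothetical, and the 3.10 constant is a placeholder assumption, not the actual 2016 value.

```python
def fip(hr: int, bb: int, hbp: int, k: int, ip: float, constant: float) -> float:
    """FIP as defined by FanGraphs; `constant` is the league/season FIP constant."""
    if ip <= 0:
        raise ValueError("innings pitched must be positive")
    return (13 * hr + 3 * (bb + hbp) - 2 * k) / ip + constant


def era(earned_runs: int, ip: float) -> float:
    """Earned runs allowed per nine innings."""
    if ip <= 0:
        raise ValueError("innings pitched must be positive")
    return 9 * earned_runs / ip


FIP_CONSTANT = 3.10  # placeholder assumption; the real constant changes every season

# Two hypothetical pitchers with the same K, BB, HBP, HR and IP (NOT real lines).
# IP must be true innings (190 1/3 = 190.333...), not box-score notation like 190.1.
shared = dict(hr=15, bb=45, hbp=5, k=170, ip=190.0)
fip_value = fip(**shared, constant=FIP_CONSTANT)

# Only earned runs differ, e.g. because of BABIP, sequencing, or defense.
for er in (45, 97):
    print(f"ER={er}: ERA {era(er, 190.0):.2f}, FIP {fip_value:.2f}, "
          f"E-F {era(er, 190.0) - fip_value:+.2f}")
```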

The next logical step is to determine which factors, if any, explain BABIP and LOB% among pitchers. Using the same pitching stats as in the previous step, I ran correlations with BABIP and LOB% separately. The following table shows the stats that had a strong (0.40 to 0.69) or moderate (0.30 to 0.39) relationship with either one.

As the first table showed, both of these stats are strongly correlated with E-F, with coefficients of 0.58 and -0.42, respectively. Nor is it a shock that they are strongly correlated with each other. The negative coefficient (-0.42) indicates, as you would expect, that a high BABIP goes with a low LOB%, and vice versa. On the BABIP side, a strong positive relationship with ERA is almost too obvious, as more balls in play falling for hits means more runs scoring. Also, since fly balls in play (not counting home runs) are turned into outs more often than ground balls are, it makes sense that BABIP has a negative relationship with fly-ball rate and a positive one with ground-ball rate. This finding, however, goes against a somewhat popular belief that ground-ball pitchers tend to have lower BABIPs.
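Because BABIP carries so much weight in this discussion, here is how it is calculated: a minimal Python sketch of the standard formula FanGraphs uses, (H - HR) / (AB - K - HR + SF). The sample line is hypothetical.

```python
def babip(h: int, hr: int, ab: int, k: int, sf: int) -> float:
    """Batting average on balls in play: home runs and strikeouts are excluded."""
    balls_in_play = ab - k - hr + sf
    if balls_in_play <= 0:
        raise ValueError("no balls in play")
    return (h - hr) / balls_in_play


# Hypothetical opponents' line against one pitcher (NOT real data).
print(f"{babip(h=150, hr=15, ab=700, k=170, sf=5):.3f}")
```

Home runs drop out of both the numerator and the denominator, which is why a pitcher’s home-run rate and his BABIP can tell quite different stories.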

The factors that correlate with LOB% are more interesting. The first one is not unexpected: a higher strikeout rate seems to lead to more runners being stranded, which is a pretty easy concept to wrap your head around. The second, however, is truly mind-boggling, and I can’t come up with a reasonable explanation for it: it indicates that the higher the home-run rate a pitcher allows, the more runners are left on base. It’s quite possible that this is just a spurious correlation with no causal link at all. Finally, the last factor listed in the table is very interesting and useful in this particular case. It suggests that higher rates of soft contact go with higher LOB%. We’ll get back to that later in this article.
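LOB% has a formula too. FanGraphs computes it as (H + BB + HBP - R) / (H + BB + HBP - 1.4 × HR). A minimal Python sketch, with a hypothetical sample line:

```python
def lob_pct(h: int, bb: int, hbp: int, r: int, hr: int) -> float:
    """Left-on-base percentage (FanGraphs formula), returned as a percentage."""
    denominator = h + bb + hbp - 1.4 * hr
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100 * (h + bb + hbp - r) / denominator


# Hypothetical pitcher line (NOT real data): 150 H, 45 BB, 5 HBP, 60 R, 15 HR.
print(f"{lob_pct(h=150, bb=45, hbp=5, r=60, hr=15):.1f}%")
```

The formula uses all runs (R), not just earned runs, so unearned runs count against a pitcher’s LOB% as well.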

So let’s go back to our pitchers and check whether any of this holds up. We know that E-F is mainly driven by BABIP and LOB%, and Hendricks and Gray had very different numbers in both. The Cubs’ righty had a .250 BABIP and an 81.5% LOB%, while the Rockies’ fireballer had a .308 BABIP and a 66.4% LOB%. Given league averages of .298 and 72.9%, respectively, Hendricks did considerably better than average, while Gray did just the opposite. So far, so good, right? These facts go a long way toward explaining the different outcomes. However, BABIP and LOB% aren’t exactly pitcher-dependent; in fact, they’re the marquee stats behind the catch-all term “luck.”
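The comparison with league average is simple arithmetic, but the direction matters: for a pitcher, a BABIP below average and a LOB% above average are both favorable. A short Python sketch using the 2016 figures quoted above; the dictionary layout and the `vs_league` helper are illustrative choices.

```python
LEAGUE_2016 = {"BABIP": 0.298, "LOB%": 72.9}
PITCHERS = {
    "Hendricks": {"BABIP": 0.250, "LOB%": 81.5},
    "Gray": {"BABIP": 0.308, "LOB%": 66.4},
}
# +1 means "higher is better for the pitcher", -1 means "lower is better".
BETTER_WHEN = {"BABIP": -1, "LOB%": +1}


def vs_league(name: str) -> dict[str, float]:
    """Pitcher minus league average, signed so that positive always means favorable."""
    line = PITCHERS[name]
    return {
        stat: round(BETTER_WHEN[stat] * (line[stat] - LEAGUE_2016[stat]), 3)
        for stat in LEAGUE_2016
    }


for name in PITCHERS:
    print(name, vs_league(name))
```

With the signs aligned, Hendricks comes out ahead of league average on both stats (+.048 BABIP, +8.6 LOB% points) and Gray behind on both.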

Unfortunately, few of the stats from the second table help explain the gap. High strikeout rates, for example, are supposed to increase LOB%, yet Gray posted a really low 66.4% despite a 9.91 K/9. Meanwhile, Hendricks’ 81.5% LOB% ranked 5th among qualified starters, even though his strikeout rate of 8.05 K/9 was right around league average. Similarly, ground-ball rate has a positive correlation with BABIP. Nonetheless, Hendricks’ higher-than-average rate of 48.4% (league average was 44.7%) came with a ridiculously low .250 BABIP, while Gray’s below-average 43.5% came with a .308 BABIP. Much the same thing happens when you look at fly-ball rates.

The only factor from the second table that does make sense in these particular cases is soft-contact rate. Hendricks ranked 1st among qualified starters in this regard, with an impressive 25.1% (league average was 18.8%), while Gray had a below-average 17.8%, which ranked 50th out of 73 qualified starters. This stat is very much pitcher-dependent, and it does help explain some of the difference in LOB%. However, it has only a moderate relationship with LOB%, as its coefficient of -0.37 shows. Is that enough to account for the massive difference in results? Intuitively, I’d say no. There is one more factor, however, that we haven’t discussed yet.
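One quick way to put “moderate” in perspective, assuming these are Pearson coefficients, is to square them: r² is the share of the variance in one stat that a straight-line fit on the other accounts for. A minimal Python sketch using coefficients reported in this article:

```python
def shared_variance(r: float) -> float:
    """r squared: share of variance linearly associated between two stats."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("r must lie in [-1, 1]")
    return r * r


# Coefficients as reported in this article.
for label, r in [("Soft% vs. LOB%", -0.37), ("BABIP vs. E-F", 0.58)]:
    print(f"{label}: r = {r:+.2f}, r^2 = {shared_variance(r):.0%}")
```

A coefficient of -0.37 corresponds to roughly 14% of the variance in LOB%, versus about 34% for BABIP’s relationship with E-F, which fits the intuition that soft contact alone can’t close a gap this large.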

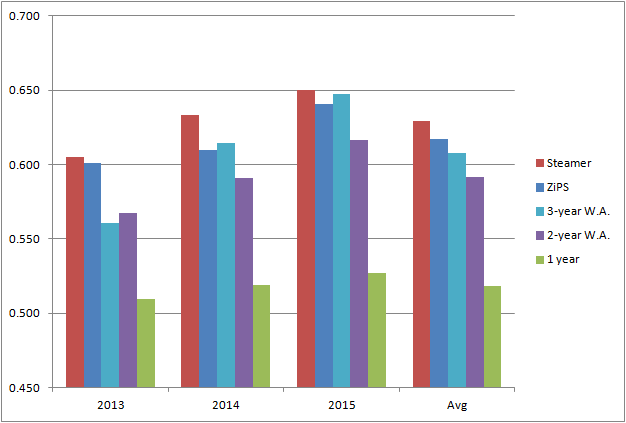

FIP stands for Fielding Independent Pitching, so the very thing FIP tries to remove from the equation might hold the key to answering our question. Defensive performance can heavily influence the outcome of a game, and it makes up a big chunk of what we generally call “luck” in a pitcher’s final results. To put a number on this idea, I looked at the correlations between each team’s yearly defensive component of WAR and its pitching staff’s BABIP, LOB%, and E-F. The data for this exercise covered every individual team season from 1989 (the first year in which play-by-play data included the location of hits and outs) to 2016.

We can see here that a team’s defense has a strong correlation with all three stats, especially E-F. Higher values of the defensive component of WAR go with lower BABIP, higher LOB%, and lower E-F, just as you would expect.

Saying that the Cubs had a great defensive performance this year is an understatement. Not only was theirs the best defense of 2016 by a wide margin, it was also the best of the last 17 years, according to FanGraphs’ defensive component of WAR. Of the 814 individual team seasons played in MLB since 1989, this year’s Cubs rank 8th. That’ll put a serious dent in opponents’ BABIP. In fact, the Cubs’ BABIP allowed of .255 (yes, that is the whole pitching staff’s BABIP) is the lowest since the ’82 Padres. Oh, and the Cubs staff’s LOB% of 77.5% is tied for 2nd highest since 1989. All of this adds up to a team E-F of -0.62. Wow. Just wow.
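The 814 figure is easy to check: MLB had 26 teams through 1992, 28 from 1993 (after adding the Rockies and Marlins) through 1997, and 30 from 1998 (after adding the Diamondbacks and Devil Rays) onward. A small Python sketch; the `mlb_teams` helper is just an illustrative name.

```python
def mlb_teams(year: int) -> int:
    """Number of MLB franchises in a season (modeled only from 1977 onward)."""
    if year < 1977:
        raise ValueError("expansion history before 1977 not modeled here")
    if year <= 1992:
        return 26
    if year <= 1997:
        return 28
    return 30


team_seasons = sum(mlb_teams(y) for y in range(1989, 2017))  # 1989 through 2016
print(team_seasons, f"Cubs' 8th place is the top {8 / team_seasons:.1%}")
```

Ranking 8th out of 814 puts the 2016 Cubs defense in roughly the top 1% of team seasons in that span.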

The Rockies’ defense, on the other hand, wasn’t bad, but it wasn’t great either. According to FanGraphs, it was 17.9 runs above average, which ranked 12th in MLB. Again, that’s really not bad at all, just miles away from the Cubs’ 115.5 runs above average. The Rockies’ staff as a whole had a .317 BABIP and a 68.0% LOB%, not unexpected from a team that plays half its games at altitude. Still, both of these values are worse than league average, resulting in a team E-F of 0.54.

All in all, Kyle Hendricks still had a better season than Jon Gray, and people will remember the 2.13 ERA, not the 4.61. This analysis just puts it in a bit more perspective and sheds some light on the little details that make big differences over the course of a long season.

The old football adage says that “defense wins championships.” That doesn’t really apply to baseball, but in the future, when I think back to the 2016 Cubs, I’ll definitely think about their defense.